About the project

Objective

The PERCy project aims to develop a reference architecture, procedures and algorithms that facilitate advanced driver assistance systems and ultimately fully automated driving by fusing data provided by onboard sensors, off-board sensors and, when available, sensory data acquired by cellular network sensing. The fused data is then exploited for safety-critical tasks such as manoeuvring highly automated vehicles in public, open areas. This framework is motivated by the key observation that off-board sensors and information sharing extend the safe operational design domain achieved when relying solely on on-board sensors, thus promising to achieve a highly improved performance-safety balance.

Background

Advanced driver assistance systems – such as adaptive cruise control, autonomous emergency braking, blind-spot assist, lane keep assist, and vulnerable road user detection – are increasingly deployed since they increase traffic safety and driving convenience. These systems’ functional safety and general dependability depend critically on onboard sensors and associated signal-processing capabilities. Since advanced driver assistance systems directly impact the driver’s reactions and the vehicle’s dynamics and can cause new hazards and accidents if they malfunction, they must comply with safety requirements. The safety relevance of onboard sensors is even higher in the case of highly automated driving, where the human driver does not supervise the driving operation. However, current standards and methodologies provide little guidance for collaborative systems, leading to many open research questions.

Crossdisciplinary collaboration

This project is a collaboration between KTH, Ericsson and Scania.

About the project

Objective

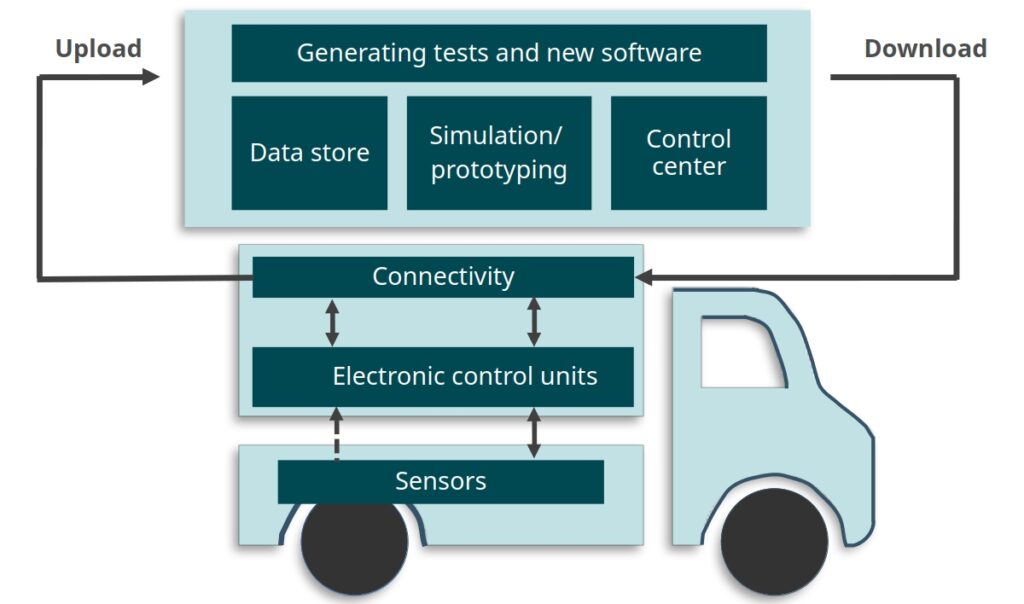

The overall objective of the project is to shorten the time needed for securely and safely deploying software updates to vehicles in the automotive industry. The project will address this in the context of software development for heavy vehicles at Scania and the toolchain for automated formal verification of code in the C programming language developed in the previous AVerT research project, called Autodeduct, which is based on the Frama-C code analysis framework and its ACSL contract specification language for C code.

As specific goals, the project will (i) extend Autodeduct with techniques for incremental formal verification as software evolves, (ii) extend the toolchain with support for non-functional requirements in software contracts focusing on safety and security properties relevant to Scania vehicles and including control flow and data flow, (iii) evaluate the techniques as implemented in the new toolchain on relevant code from Scania’s codebase to determine their efficacy, and (iv) develop a case study applying the new toolchain to a realistic software development scenario that demonstrates its applicability in an industrial setting.

Background

In the automotive industry, digitization means that vehicles increasingly depend on and are comprised of software components, leading towards software defined vehicles where most functions are primarily controlled by software. However, vehicle software components need to be continually revised by manufacturers to fix bugs and add functionality, and then deployed to vehicles in operation. Development and deployment of such software updates is currently demanding and time consuming—it may take months or even years for a new software component revision to reach vehicles.

Delayed time to deployment for software updates is in large part due to the long-running processes employed for assuring the revised software system meets its requirements, including legal requirements and requirements on safety and security. Currently, these processes often involve costly analysis of a system in a simulated or real environment, e.g., by executing an extensive suite of regression tests. The time required for running such tests can potentially grow with the size of the whole software system, e.g., as measured by lines of code in the codebase. Regression tests may also fail to consider non-functional properties such as security. The project aims to enable more rapid and trustworthy incremental development of software in heavy vehicles with guarantees of safety and security. Trust is built in the vehicle software development process by adopting tools with rigorous mathematical guarantees.

Crossdisciplinary collaboration

The project partner is Scania CV AB.

Background and summary of fellowship

Fundamental bounds of information processing systems provide the limit on theoretically possible achievable performances. For instance, in communications, the information-theoretic Shannon capacity describes the fundamental bound on what communication rate can be maximally achieved with vanishing error probability. This fundamental bound can be then used as a benchmark for the actual system design. It is therefore very valuable for the system design assessment of an actual system and the question of additional development work in the system design might be worth it or if a system change for further improvement would be a better strategy. In a privacy and security setting, the fundamental bounds describe what performances an adversary can achieve in the worst case. It therefore can be used to derive security or privacy guarantees which leads to security- or privacy-by-designs. Moreover, the proof of the fundamental bound often reveals what information-processing structure is the most promising strategy. It therefore often provides a deep understanding of information processing and guides towards efficient design structures. The results are often timeless and there are numerous interesting open problems that need to be solved.

In this project, we want to explore fundamental bounds for traditional communication scenarios, source coding setups, distributed-decision making, physical-layer security and privacy as well as statistical learning and data disclosure.

Background and summary of fellowship

One of the main causes of the insecurity of IT systems is complexity. For example, the Linux kernel has been designed to run on all possible platforms (including our IoT light bulb, the largest supercomputer, and the International Space Station) and includes all sorts of features to accommodate several usage scenarios. Even if the kernel is a foundational part of the majority of our software infrastructure and has been developed by high-quality engineers, this complexity results in 30 million lines of code that are virtually impossible to implement correctly. The kernel contains thousands of documented bugs and an unknown number of undiscovered issues. This leaves fertile ground for attackers that can steal our data, use our resources to mine bitcoins, or take complete control of our systems.

We believe that systems should be developed with much more rigorous techniques. We develop methods to mathematically model hardware and software systems and techniques to verify with mathematical precision the impossibility of vulnerabilities. Even if these techniques are heavy-duty, we focus our research on the analysis of the components that constitute the root of trust of the IT infrastructure, with the goal of demonstrating that faults of untrusted applications can be securely contained and that cannot affect the critical part of the system. That is, we do not aim to guarantee that PokenGo is bug-free, but we can mathematically rule out that its bugs can be used to steal your BankID or your crypto wallet. In particular, we are currently focusing on developing the theories to prevent recent famous vulnerabilities (e.g. Spectre) that are caused by low-level processor optimizations.

Background and summary of fellowship

Data has all the information, and efficient processing of data for information extraction is a key to achieving the right decision. Computers help to understand the data, extract important information and then finally provide a decision. Computers use a particular tool from the engineering field of computer science, called machine learning for realizing the help. The use of machine learning is growing, from speech recognition to robots to autonomous cars to medical fields including life science data analysis. Today machine learning is at the core of many intelligent systems across all science and engineering fields. Naturally, machine learning has to be highly reliable. Thanks to the Digital Futures fellowship, I am fortunate to address a challenge in modern machine learning. The challenge is how to make the machine learning fields more trustworthy and unbiased. For example, a visual camera-based face recognition system should not discriminate against people due to skin colour or gender.

Towards the challenge, a prime concern is to develop explainable machine learning (xML) systems, closely related to explainable artificial intelligence (xAI). Preferably, users should be able to understand what can be the precise effect or outcome of the systems before their formal use. Mistakes after formal use are costly in many situations, for example, detection of infection in a clinic/hospital. We should fully understand how computers use data for information extraction, and then reach a decision using the information. In turn, how computers can explain actions to users. The development of xML/xAI requires a confluence of mathematics, computer science and real-life understanding of applications scenarios including user perspectives.

Background and summary of fellowship

Wireless connectivity is a key enabler for the digital transformation of society, and we start to take its availability for granted. Although the wireless data speeds have grown tremendously, we still experience unreliable wireless coverage; for example, video streaming might work flawlessly until it suddenly stalls when you walk around a corner. The Digital Futures fellowship will enable my research group at KTH to tackle this challenge. We need to explore new ways of building wireless network infrastructure to make coverage holes an issue of the past.

Two particular solutions will be explored. The first is to spread out base stations over the city, instead of collecting them in towers, to increase the chance that every location is covered by some of them. The second solution is to make use of reconfigurable “mirrors”, which are thin plates that can be placed on buildings to reflect signals in controllable ways to remove coverage holes. These mirrors are not moved mechanically but change their electrical properties to achieve the same effect. The project will also explore how the “spill energy” from wireless signals can be utilized to power the batteries of devices, particularly internet-of-things equipment that is not operated by humans.

About the project

Objective

The project aims to model and predict the dynamic urban road traffic noise by integrating the data-driven microscopic traffic simulation and instantaneous noise emission and propagation models. It will use passive traffic and publicly available built environment data to demonstrate a high-fidelity dynamic traffic noise simulator with geographic information system (GIS) tools to predict and visualize noise levels at a time scale and geographic granularity previously unattained.

The DIRAC model and tool will pave the way for complex, dynamic urban road traffic noise modeling using passive traffic data, bridge the digital and physical urban world, and support ongoing efforts and collaborative decision-making of noise mitigation measures for a livable and healthy city, particularly for the growing demand of urban mobility for both people and goods in cities.

Background

Noise pollution is increasingly considered a major environmental issue in urban areas, with rapid urbanization (projected to reach 68% of the world’s population by 2050) in the context of the growing demand for mobility for people and goods in cities. It is recognized as a major cause of public health concerns, e.g., annoyance, sleep disturbance, other health effects (e.g., depression, anxiety, and mood swings), and decreased productivity. In particular, road traffic is deemed the major noise source in urban areas. Some 125 million people in the EU (32% of the total population) are estimated to be exposed to harmful traffic noise levels.

Several initiatives have, therefore, followed the European Noise Directive, mandating the development of urban noise maps. However, strategic noise maps exhibit strong limitations in view of noise exposure mitigation measures: long-time (yearly) averages based on traffic flow, static representations, source-rather than receiver-centric, and non-representative of transitioning vehicle fleet. They are limited in modeling and predicting fluctuations of noise levels over time under planning and management interventions, yet they are a fundamental tool to address specific dimensions of human health effects.

Built on the multidisciplinary team’s expertise and project in traffic simulation and traffic noise modeling, the DIRAC project aims to develop and demonstrate a high-fidelity road traffic noise simulation model in urban areas empowered by ubiquitous passive traffic and open-source data and Digital Twin models. For this, data-driven models work in parallel with real-life measurements to reproduce findings and predict the results of response actions. The models are agent-based (ABM) and open-source, enabling city stakeholders to recognize their roles and management models for informative decision-making of noise mitigation measures toward more livable and healthy cities.

Crossdisciplinary collaboration

The researchers in the team represent the KTH School of Architecture and Built Environment (ABE), Civil and Architectural Engineering Department, Transport Planning Division and KTH School of Engineering Science (SCI), Engineering Mechanics Department, The Marcus Wallenberg Laboratory for Sound and Vibration Research. The project is supported by strategic research partners at the KTH Center of Traffic Research (CTR), VTI (Swedish National Road and Transport Research Institute), Linköping University, and the University of Tartu.