Background and summary of fellowship

Behaviour Trees (BTs) represent a hierarchical way of combining low-level controllers for different tasks into high-level controllers for more complex tasks. The key advantages of BTs have been shown to include the following:

- Recursive structure: The BT is a rooted tree and at every edge of that tree, the interface between the parent and the subtree is the same, centred around a return status of either Success, Failure or Running.

- Modularity: Due to the recursive structure, a complex subtree can be seen as a single leaf, and vice versa. This enables the encapsulation of complexity.

- Transparency: The recursive structure of BTs makes them human-readable. You can always look at a BT and see why it is executing a particular behaviour. This fact in combination with the modularity described above enables a user to understand complex BTs by analyzing one subtree at a time.

- An efficient tool for human system design: BTs were created by computer game programmers to make their lives easier when creating complex AI designs. Modularity is a well-known tool for handling complexity, and transparency is vital in any human design.

- An efficient tool for automated design: The modular recursive structure simplifies automated design.

- A structure that enables formal analysis: The modular recursive structure makes it possible to formally analyze safety, convergence and regions of attraction.
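The uniform parent/child interface described in the list above can be illustrated with a minimal sketch. The class names (`Node`, `Leaf`, `Sequence`, `Fallback`) and the door example are illustrative assumptions, not the project's implementation. Sequence and Fallback are the composition nodes commonly used in the BT literature, but the text above does not name them.

```python
from enum import Enum
from typing import Callable, List


class Status(Enum):
    SUCCESS = "Success"
    FAILURE = "Failure"
    RUNNING = "Running"


class Node:
    """Common interface: every node, leaf or subtree, is ticked and returns a Status."""

    def tick(self) -> Status:
        raise NotImplementedError


class Leaf(Node):
    """Wraps a low-level controller or condition (hypothetical callables here)."""

    def __init__(self, name: str, fn: Callable[[], Status]):
        self.name = name
        self.fn = fn

    def tick(self) -> Status:
        return self.fn()


class Sequence(Node):
    """Ticks children left to right; returns the first status that is not Success."""

    def __init__(self, children: List[Node]):
        self.children = children

    def tick(self) -> Status:
        for child in self.children:
            status = child.tick()
            if status is not Status.SUCCESS:
                return status
        return Status.SUCCESS


class Fallback(Node):
    """Ticks children left to right; returns the first status that is not Failure."""

    def __init__(self, children: List[Node]):
        self.children = children

    def tick(self) -> Status:
        for child in self.children:
            status = child.tick()
            if status is not Status.FAILURE:
                return status
        return Status.FAILURE


# Illustrative task: pass through a door that is currently closed.
door_open = Leaf("door_open?", lambda: Status.FAILURE)
open_door = Leaf("open_door", lambda: Status.RUNNING)
walk_through = Leaf("walk_through", lambda: Status.SUCCESS)

# A subtree is used exactly like a leaf: the parent only sees its Status.
ensure_open = Fallback([door_open, open_door])
root = Sequence([ensure_open, walk_through])
result = root.tick()
```

Because `ensure_open` exposes the same `tick` interface as a leaf, it can be swapped for a single leaf, or for a much larger subtree, without changing `root`. This is the modularity property in code form.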

In this project, we will use the properties of BTs listed above to synthesize controllers that combine the efficiency of reinforcement learning with formal performance guarantees such as safety and convergence to a designated goal area.

Background and summary of fellowship

Reinforcement Learning (RL) is concerned with learning efficient control policies for systems with unknown dynamics and reward functions. RL plays an increasingly important role in a large spectrum of application domains including online platforms (recommender systems and search engines), robotics, and self-driving vehicles. Over the last decade, RL algorithms, combined with modern function approximators such as deep neural networks, have shown unprecedented performance and have been able to solve very complex sequential decision tasks better than humans. Yet these algorithms lack robustness and are most often extremely data-inefficient.

This research project aims to contribute to the theoretical foundations for the design of data-efficient and robust RL algorithms. To this end, we develop a fundamental two-step process:

- We characterize information-theoretical limits for the performance of RL algorithms (in terms of sample complexity, i.e., data efficiency).

- We leverage these limits to guide the design of optimal RL algorithms, i.e., algorithms that approach the fundamental performance limits.

Background and summary of fellowship

In many application areas, it is not sufficient to present the output of machine learning models to the users without providing any information on what leads to the specific predictions or recommendations and how (un)certain they are. The strongest machine learning models are however often essentially black boxes. In order to enable trust in such models, techniques for explaining the predictions in the form of interpretable approximations are currently being investigated. Another cornerstone for enabling trust is that the uncertainty of the output of the machine learning models is properly quantified, e.g., that the output prediction intervals or probability distributions are well-calibrated.
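As a small illustration of what "well-calibrated" means for prediction intervals: intervals issued at a nominal level (say 80%) should contain the true value about that fraction of the time. The sketch below computes this empirical coverage. The function name and the toy numbers are illustrative assumptions and are not taken from the project's packages.

```python
from typing import Sequence, Tuple


def empirical_coverage(
    intervals: Sequence[Tuple[float, float]],
    targets: Sequence[float],
) -> float:
    """Fraction of true values that fall inside their prediction interval."""
    if len(intervals) != len(targets) or len(targets) == 0:
        raise ValueError("intervals and targets must be non-empty and equally long")
    hits = sum(1 for (low, high), y in zip(intervals, targets) if low <= y <= high)
    return hits / len(targets)


# Toy data (made up for illustration): five intervals issued at a nominal 80% level.
intervals = [(0.0, 2.0), (1.0, 3.0), (2.0, 4.0), (3.0, 5.0), (4.0, 6.0)]
targets = [1.0, 2.5, 4.5, 3.2, 5.0]

coverage = empirical_coverage(intervals, targets)  # 4 of 5 targets are covered
```

On real data, coverage well below the nominal level would indicate overconfident intervals, and coverage well above it would indicate needlessly wide ones.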

Motivated by collaborations with Karolinska Institutet/University Hospital on sepsis prediction, Scania on predictive maintenance and the Swedish National Financial Management Authority on gross domestic product (GDP) forecasting, techniques for quantifying uncertainty and explaining predictions will be developed and evaluated. In addition to scientific papers, the project will produce Python packages that support reliable machine learning by complementing the predictions of state-of-the-art machine learning models with explanations and uncertainty quantification.

Background and summary of fellowship

Social robots and virtual agents are currently being explored and developed for applications in a number of fields such as education, service, retail, health, elderly care, simulation and training, and entertainment. For these systems to be accepted and successful, not only in task-based interaction but also in maintaining user engagement in the long run, it is important that they can exhibit varied and meaningful non-verbal behaviours and can adapt to the interlocutor in different ways. Adaptivity in face-to-face interaction (sometimes called mimicry) has, for example, been shown to increase liking and affiliation.

This work addresses how style aspects in non-verbal interaction can be controlled, varied and adapted across several modalities, including speech, gesture and facial expression. The project entails novel data collection of verbal and non-verbal behaviours (audio, video, gaze tracking and motion capture) with rich style variation, but also makes use of existing datasets for base training. Synthesis models trained on this data will be based primarily on deep probabilistic generative modelling, conditioned on relevant style-related parameters. Multimodal generation paradigms that produce congruent behaviours in more than one modality at a time, e.g. both speech and gesture, in a coherent style will also be explored and evaluated in perceptual studies or experiments with real interactive contexts.

About the project

Objective

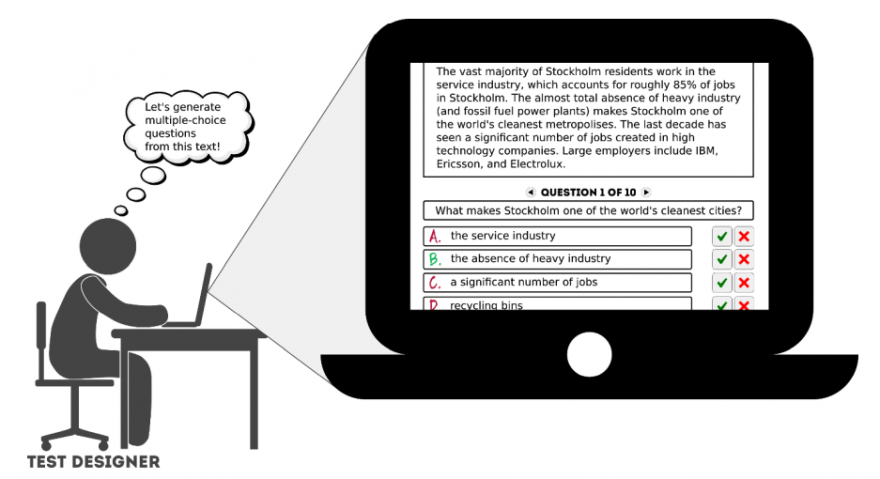

The objective of the Swedish Question Generation for Assessing Reading Comprehension (SWE-QUEST) project is to develop a demo system that, given a text, automatically generates multiple-choice reading comprehension questions on the text, as shown in the picture above.

Using a neural-network-based generative model, the demo system will generate the whole multiple-choice question, both the question itself and the answer alternatives. Although the example above is in English, our demo system will work for Swedish text. The project will push the state of the art in natural language generation. The system is intended to be used by teachers of SFI (Svenska för invandrare) to facilitate test construction and the development of teaching materials, but it can also be used for self-study of Swedish.

Background

The SFI students form a heterogeneous group, and the SFI classes are often too big to allow the teacher time to adapt the level of teaching to each student individually. A tool that rapidly and easily generates several suggested multiple-choice questions (MCQs) on text material for teaching and assessment could therefore be highly useful.

The task of automatically generating reading comprehension questions (without distractors) using neural methods has been studied before, primarily for English. There have also been some attempts at generating distractors, given the question and the correct answer, using neural methods. However, no attempt has been made to construct a trainable model that generates the whole MCQ in one go.

Crossdisciplinary collaboration

The researchers in the team represent the KTH School of Electrical Engineering and Computer Science, the Division of Speech, Music and Hearing, and the Department of Swedish Language and Multilingualism at Stockholm University.

About the project

Objective

Susan’s ride on Campus2030 aims to demonstrate the potential of digitalization in reducing the carbon footprint and improving the cost-efficiency of the construction and transportation industries. With this objective, the project will establish a one-of-a-kind smart road infrastructure demonstrator on the KTH campus Valhallavägen for the integrated design, construction and operation of smart infrastructures. The demonstrator will incorporate a digital twin of the KTH campus, consisting of multiple models and data sets that enable virtual assessment and experience of the campus infrastructure while being validated and updated through real-time data feeds from various sensors. Our work in this direction can be seen on our testbed for Intelligent Transportation Systems at www.adeye.se. Susan’s ride will showcase the potential of edge computing, federated learning, and digital twins in the digital transformation of road construction and autonomous vehicle path planning.

Background

Autonomous vehicles, dynamic charging of electric vehicles and vehicle-to-infrastructure communication are just a few examples of technologies that require a systemic solution to function sustainably. Making the smart road sustainable requires a partnership between road owners, operators, electricity companies, vehicle manufacturers, transport and logistics companies, and technology suppliers in digitalization. Data will become a fundamental asset in this partnership. It must be collected through a combination of new sensors built into the infrastructure at construction time and sensors on smart vehicles, including construction machinery.

Crossdisciplinary collaboration

The researchers in the team represent the School of Electrical Engineering and Computer Science, KTH, the School of Architecture and the Built Environment, KTH and the School of Industrial Engineering and Management, KTH. The project leverages and extends research carried out in the Campus 2030 project and the TECoSA research centre.

About the project

Join the Second Drone Challenge at the Digital Futures Drone Arena, a one-of-a-kind interactive event with aerial drone technology! This year, the challenge focuses on moving with drones in beautiful, curious, and provocative ways – without needing to write a single line of code. The event takes place on May 16-17, 2023, at KTH’s Reactor Hall in Stockholm, Sweden. Read more about the challenge, the prizes, and how to sign up on our Drone Arena challenge website.

Objective

The Digital Futures Drone Arena is a concrete and conceptual platform where key players in digital transformation and society join in a conversation about the role and impact of mobile robotics, autonomous systems, machine learning, and human-computer interaction.

The platform is a novel aerial drone testbed, where drone competitions occur periodically to understand and explore the unfolding relationships between humans and drones. Aerial drones serve as an opportunity to create a foundation that outlives this project: a lasting basis for testing technical advances and for studying, designing, and envisioning novel relationships between humans, robots, and their functioning principles.

Background

Few robot testbeds exist to experiment with application-level functionality. The Digital Futures Drone Arena bridges this gap by providing an easy-to-use programmable drone testbed for experimenting with novel drone applications and exploring the relations between humans and drones. The latter activity is driven by the concept of a ‘soma’, the lived and felt body as it exists, moves, and senses the world. The theory of somaesthetics provides an ethical stance on the soma, highlighting how technologies and interactions encourage certain movements and practices while discouraging others. As a critique of technology design and use, somaesthetics addresses the limited and limiting ways we sit at desks and tap away at keyboards. When we interact closely with drones, we must adapt how we control them and how we move around them.

Crossdisciplinary collaboration

The researchers in the team represent the Connected Intelligence Unit, RISE and the Department of Computer and Systems Sciences, Stockholm University.

Articles: