About the project

Objective

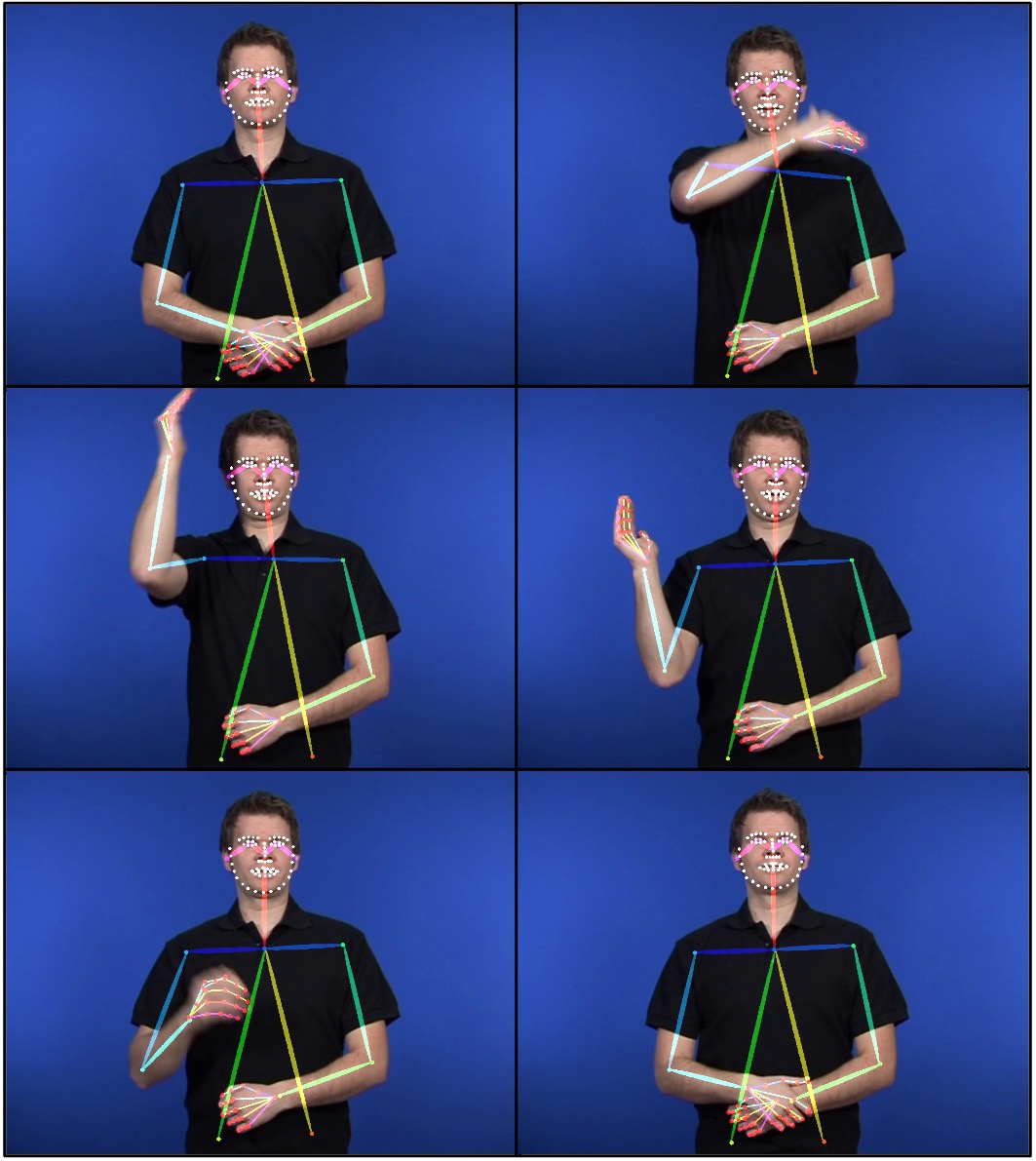

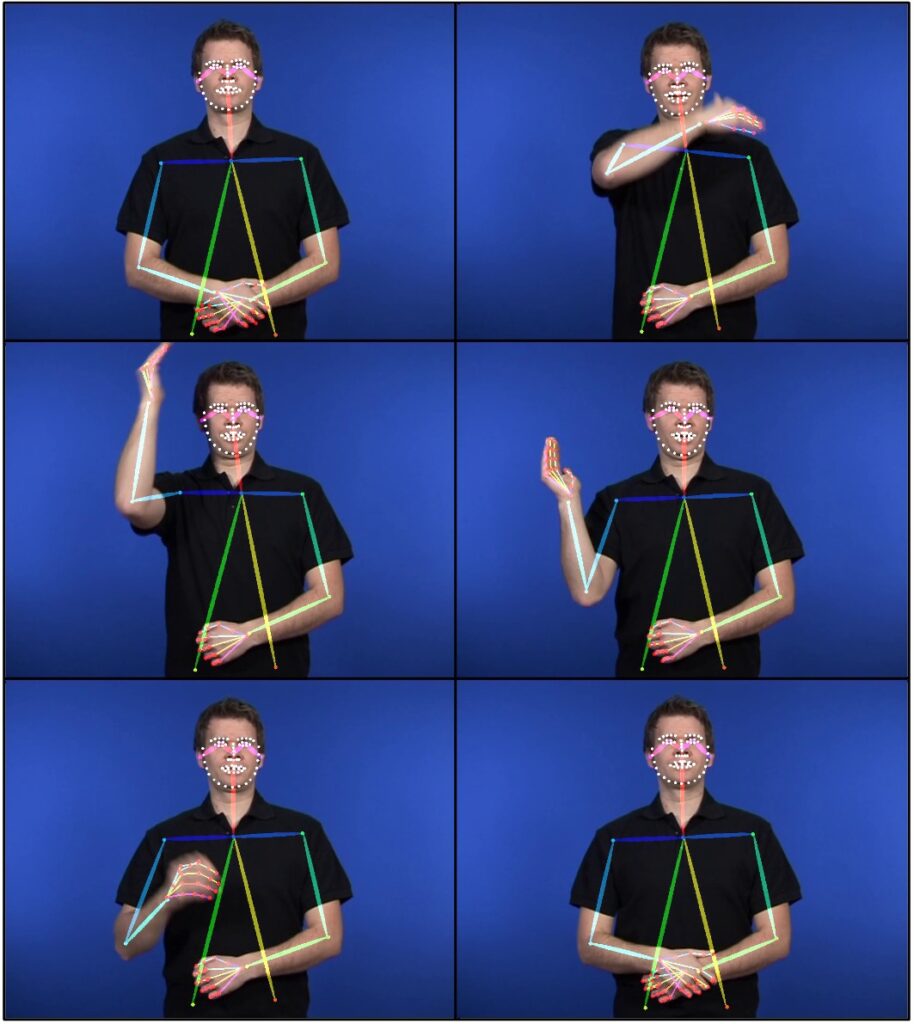

In this project we develop semantic tokenization models for sign language (SL) and combine them with large language models, leading to machine learning models that can understand, process, translate, and generate SL efficiently. To achieve this, we will pool large data resources through our collaboration with SVT, together with other large-scale multilingual sign language datasets.

We will fine-tune and evaluate our models on a number of downstream tasks, e.g., sign language recognition, segmentation, and production. The project addresses the need for inclusive and accessible urban infrastructure by reducing communication barriers for deaf and signing communities. For example, automated translation and interpretation services can enable seamless interaction between signers and non-signers, fostering integration in public services, workplaces, and social settings.

Background

We are currently witnessing an AI revolution fueled by large language models. What started out as text-only models has developed into general multimodal information processing frameworks that handle many languages and modalities such as images, speech, and video, often with surprising accuracy.

Sign languages are visuo-spatial natural languages used by more than 70 million people worldwide. Sign languages lack a text-based representation and have so far neither been part of nor benefited from the developments in large language models and foundation models. Furthermore, sign language technology is not a priority for the large corporations driving current AI developments.

Signed languages pose a special challenge because, unlike spoken languages, they have no universally adopted written form. For storage and transmission, one has to rely on video. Communication between signers and non-signers therefore requires either costly interpreter services or limited text-based communication, which for a native signer takes place in a second language. The promise and potential utility of sign language (SL) technology is thus substantial: it can reduce communication barriers and allow signers to use language technology in their native language. Despite this, progress in SL technology has been limited compared to the rapid development for spoken languages. In this project we propose an efficient way for sign language to become part of the LLM ecosystem and benefit from the GPT revolution.

Cross-disciplinary collaboration

The proposed project is a multidisciplinary endeavor. Advancing the state of the art in SL processing through foundation models requires combined expertise in machine learning, spoken language engineering, data processing, SL corpora, and SL linguistics, including the expertise of native sign language users. The project team has been assembled to provide this expertise.