About the project

Objective

This research project will provide the foundations for Resilient Decentralized Computing: a new approach to rapidly build continuous, trusted and scalable applications with built-in resilience and privacy. While state-of-the-art cloud technologies require low-latency reliable networks at their core, the project proposes a decentralized computing abstraction inspired by biological structures, called “PODS”, which enables resilience and privacy even in decentralized networks of heterogeneous, often faulty devices that are geo-distributed and only partially online. PODS will be supported by a new programming system which will guarantee data consistency and privacy at compile time, even before software is executed.

Background

The prominent interest in big data technologies and cloud computing has led to significant advancements in how we can reliably store, process and provision data in controlled environments. These include managed data centers that shield human users from handling failure outbreaks and data consistency errors. In the context of smart cities and edge services, however, the majority of computing occurs at the “outskirts” of cloud data centers, namely the edge. The same level of maturity and isolation is yet to be reached in general-purpose software components that operate continuously outside managed cloud environments, yet encapsulate critical logic and actuation in response to real-world events. Creators and users of cloud and edge services today constantly face unintuitive complexities relating to data consistency, resilience to failures, scalability and privacy while working with non-declarative and low-level programming interfaces.

Crossdisciplinary collaboration

The researchers in the team represent the School of Electrical Engineering & Computer Science, KTH and the Division of Computer Systems, RISE.

About the project

Objective

This project aims at developing smart autonomous power converters for optimal support of electric power systems. The project will build a new control framework based on digitalization and AI to provide optimal grid support functions and enable further integration of renewable energy sources. This is achieved by developing a novel combined optimization and control algorithm for coordination and an AI-based scheme for autonomous control of smart converters.

The outcome of this project is expected to enhance the resilience of electrical power systems with large-scale integration of renewables and add significant value to the digitalized electrical power industry.

Background

To achieve the national target of 100% renewables by 2040, renewables are increasingly integrated into electric power systems. Unfortunately, intermittent renewables increase the risk of grid instability through voltage fluctuation, frequency deviation and inertia issues, which limits the further integration of renewables. Renewable interface converters offer promising new methods to provide various functions for grid support. Utilizing the grid support capabilities of the converters efficiently and successfully requires optimized coordination of many converters. However, optimal coordination is challenging due to limited communication support, multi-timescale operation, various real-time control actions, and computational complexity.

Crossdisciplinary collaboration

The researchers in the team represent the Department of Electrical Engineering at KTH EECS and the Department of Mathematics at KTH SCI.

About the project

Objective

Our proposal is to develop a programming system called “Portals” that combines the built-in trust of cloud data stream processing and the flexibility of message-driven decentralized programming systems. The unique design of Portals enables the construction of systems composed of multiple services, each built in isolation, so that atomic processing and data-protection rights are uniformly maintained. Portals’ innovative approach lies in encoding data ownership and processing atomicity within data in transit and exposing them to the programmer as core programming elements, namely “atoms” and “portals”. This will simplify complex systems engineering by multiple independent teams and enable a new form of serverless computing where any stateful service can be offered as a utility across cloud and edge.

Background

Enabling trust in distributed services is not an easy task. Trust comes in different forms and poses concrete challenges. For example, data systems can be trusted for their data-protection guarantees (e.g., GDPR), allowing users to access or delete their data or revoke access at any time. Trust can also be manifested in safety or consistency guarantees, enabling exactly-once processing semantics or serializable transactions. All such examples of implementing trust are extremely hard problems in practice that go well beyond the reach and expertise of system engineers, especially when implementing distributed services with multiple copies of the data being accessed simultaneously. Portals is a first-of-a-kind programming system that materializes all aspects of trust within its programming and execution model. Programs written in Portals can scale and evolve while guaranteeing that all properties and invariants that manifest “trust” are constantly satisfied.

Another important driving need behind Portals is flexibility, accessibility and ease of use. A programming system is meant to simplify the work of its developers substantially. Serverless computing has been one of the rising concepts in cloud computing technologies in the last few years; that is, a model where developers can build and deploy their applications without worrying about the underlying infrastructure. However, current serverless platforms often have limitations regarding the types of applications that can be built and the ability to interface with persistent state. Portals aims to address these limitations by providing a message-driven decentralized programming system that allows developers to easily build and deploy a wide range of applications. The system’s unique design enables developers to focus on the business logic of their applications and their interdependencies while being oblivious to the decentralized underlying infrastructure or transactional mechanisms employed in the background.

Overall, Portals is a unique programming system that aims to simplify the work of developers while also enabling trust in distributed services. Its innovative approach combines the scalability, security and reliability of cloud data stream processing with the flexibility of actor programming systems. It enables a new form of serverless computing where any stateful service can be offered as a utility across cloud and edge environments.

Crossdisciplinary collaboration

The researchers in the team represent the School of Electrical Engineering at KTH and the Computer Science and Digital Systems Division, Computer Systems Lab at RISE Research Institutes of Sweden.

Portals Website: https://www.portals-project.org/

About the project

Objective

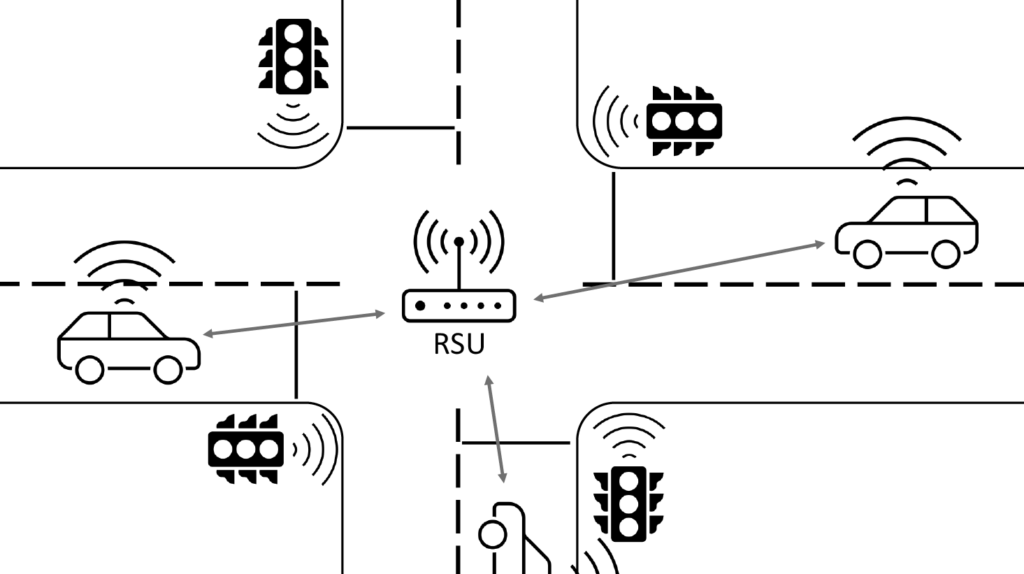

GPARSE aims to mitigate the harm of collisions at road intersections proactively and reactively using edge infrastructure. The proactive approach orchestrates traffic arrival at the intersection using a novel concept of safety zones to avoid collisions. If a collision is nevertheless likely, the reactive approach enables the roadside unit to provide resources to the affected vehicles. This includes contingency path planning that considers the safety zones and aggregated sensor data of the intersection for a complete view that cannot be obtained by the vehicles’ sensors alone. To achieve this, GPARSE will focus on nominal and contingency path planning, considering the novel concept of safety zones and the available information the roadside unit provides. This must be done in a way that guarantees timely interaction between the different actors at the intersection. Therefore, GPARSE will develop techniques to allow the roadside unit to provision computation resources for the different types of workloads that emerge from sensors, proactive tasks and reactive tasks so that the different timing constraints of the workloads are met. This is complicated by the fact that these constraints often span several chains of different computation tasks and platforms.

Background

Low-speed collisions at intersections are common but can still lead to permanent impairment, in particular for women. Rudimentary driver assistance systems can reduce the severity of injuries by braking, but more advanced mitigation of unavoidable collisions faces several challenges. In particular, no single vehicle has the necessary overview, other traffic interferes with the situational awareness of driver assistance systems and can be involved in secondary collisions, and the timeframes involved are short. Contingency path planning, i.e. the reconfiguration of unavoidable collisions to decrease the resulting harm, must be able to estimate the collision parameters of the involved actors at runtime. This is challenging in the general case and has a large effect on the outcome of the approach. At the same time, computation platforms on vehicles, as well as roadside units, must schedule heterogeneous workloads in such a way that diverse timing constraints are met. Therefore, online orchestration of dynamic and static workloads under temporal constraints across all compute nodes is necessary.

Crossdisciplinary collaboration

The researchers in the team represent the School of Electrical Engineering and Computer Science (EECS), KTH and the School of Industrial Engineering and Management (ITM), KTH.

About the project

Objective

This research project aims to realize faster-than-real-time (simulation time less than physical flow time) and high-resolution fluid flow simulation in engineering applications, with indoor climate as a pilot. The expected outcomes of this project are a static CNN for super-resolution to achieve fast prediction of steady-state indoor airflow, a hybrid RCNN for super-resolution to achieve faster-than-real-time prediction of transient indoor airflow, and standards for low-resolution input data obtained from numerical simulation and experiments. Besides indoor flow simulations, this project would open a broad spectrum of accurate computational fluid dynamics (CFD) applications in engineering, complementary to today’s standard application of RANS turbulence models.

Background

Fast, high-resolution heat and mass transfer prediction is critical in many engineering applications. For example, in creating a desired indoor climate, fast and high-resolution airflow and contaminant transport simulation would help sustain life, reduce cost, and minimize energy consumption. In specific scenarios such as emergency management, conceptual design, and heating, ventilation, air conditioning and refrigeration (HVAC&R) system control, faster-than-real-time simulation is desired.

Borrowed from computer vision and image recognition, super-resolution is a promising novel approach for the fast analysis of fluid mechanics problems. Super-resolution refers to techniques that obtain a high-resolution flow image output from a low-resolution flow image input. However, super-resolution implementation in real engineering applications faces two major challenges. Firstly, the low-resolution input flow data can be obtained either by fast and computationally inexpensive simulation, e.g., coarse grid CFD simulation, which might be associated with wrong physics, or by experimental measurements, for which the minimum number of sensors needed should be identified. Secondly, for transient indoor/outdoor airflow, super-resolution fails to extrapolate to flow fields that belong to a different statistical distribution. Therefore, this study aims to solve the two challenges that state-of-the-art super-resolution models face.
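To make the low-resolution to high-resolution mapping concrete, the following is a minimal sketch in plain Python, not the project's method. It assumes a small hypothetical 2D scalar field on a fine grid, produces a coarse version by block averaging, and then upsamples it again with nearest-neighbour replication. The helper names (`coarsen`, `upsample_nearest`, `mean_abs_error`) and the sample values are illustrative assumptions. The remaining error is the detail that a learned super-resolution model would be trained to recover.

```python
def coarsen(field, factor):
    """Average-pool a 2D field by an integer factor (fine grid -> coarse grid).

    Assumes the number of rows and columns is divisible by `factor`.
    """
    rows, cols = len(field), len(field[0])
    out = []
    for i in range(0, rows, factor):
        out_row = []
        for j in range(0, cols, factor):
            block = [field[a][b]
                     for a in range(i, i + factor)
                     for b in range(j, j + factor)]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out


def upsample_nearest(field, factor):
    """Naive baseline: repeat every coarse cell `factor` times in each direction."""
    out = []
    for row in field:
        wide = [value for value in row for _ in range(factor)]
        out.extend([list(wide) for _ in range(factor)])
    return out


def mean_abs_error(a, b):
    """Mean absolute difference between two equally sized 2D fields."""
    diffs = [abs(x - y) for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)


# Hypothetical fine-grid field (e.g. one velocity component), for illustration only.
fine = [
    [1.0, 2.0, 3.0, 4.0],
    [2.0, 3.0, 4.0, 5.0],
    [3.0, 4.0, 5.0, 6.0],
    [4.0, 5.0, 6.0, 7.0],
]

coarse = coarsen(fine, 2)                # low-resolution input
baseline = upsample_nearest(coarse, 2)   # naive high-resolution estimate
error = mean_abs_error(fine, baseline)   # detail lost by the naive baseline
```

In a learned setting, pairs such as (`coarse`, `fine`) would serve as training input and target, and the nearest-neighbour estimate gives a simple reference to compare against.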

Crossdisciplinary collaboration

The researchers in the team represent the KTH School of Architecture and the Built Environment, Department of Civil and Architectural Engineering, and the KTH School of Engineering Sciences, Department of Engineering Mechanics.

About the project

Objective

DeepFlood aims to develop novel hybrid models and flood maps with water depth information to support real-time decision-making and to present them to the Swedish and international scientific community and to stakeholders. The research will improve our fundamental understanding of SAR-based flood mapping by developing novel hybrid PolSAR-metaheuristic-DL models.

Background

Precise and fast flood mapping will help water resources managers, stakeholders, and decision-makers in mitigating the impact of floods. Rapid detection of flooded areas and information about water depth are critical for assisting flood responders, e.g., operation specialists, local and state authorities, etc., and increasing preparedness of the broader community through actions such as home risk mitigation and evacuation planning.

This project seeks to fill current knowledge gaps in flood management by enabling accurate and rapid flood mapping and providing water depth information using novel hybrid PolSAR-metaheuristic-DL models and high-resolution remote sensing data. It will also advance flood detection and support notification systems by identifying 1) bands and polarizations that contain the most information for detecting flooded areas in different land covers; 2) the most effective PolSAR features in each band for flood mapping; 3) whether the most informative PolSAR features are the same for different land covers; and 4) which of the widely used metaheuristic and DL models are most efficient for detecting flooded areas and estimating water depth.

Crossdisciplinary collaboration

The researchers in the team represent KTH Royal Institute of Technology and Stockholm University.

About the project

Objective

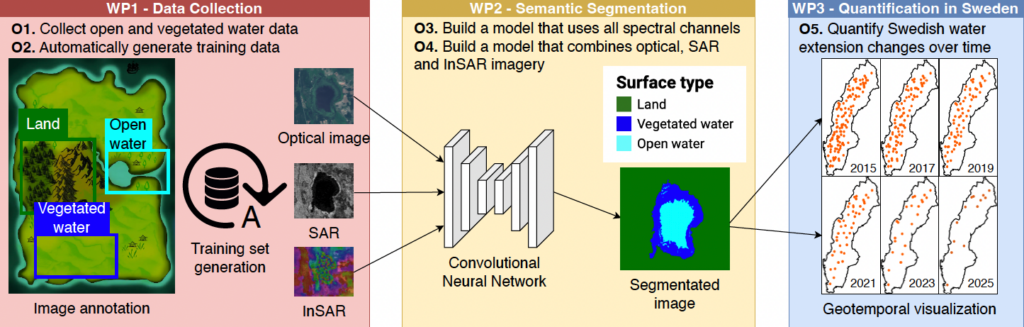

The main purpose of the DeepAqua project is to quantify the changes in surface water over time. We want to create a real-time monitoring system of changes in water bodies by combining remote sensing technologies, including optical and radar imagery, with deep learning techniques to perform computer vision and transfer learning. Employing this innovative strategy will allow us to calculate water extent and level dynamics with unprecedented accuracy and response time. This approach offers a practical solution for monitoring water extent and level dynamics, making it highly adaptable and scalable for water conservation efforts.

Background

Climate change presents one of the most formidable challenges to humanity. In the current year, we have witnessed unprecedented heatwaves, extreme floods, an increasing scarcity of water in various regions, and a troubling surge in the global extinction of species. Halting the advance of climate change necessitates the preservation of our existing water resources. At the same time, recent advancements in remote sensing technology have yielded a wealth of high-quality data, opening up new avenues for researchers to leverage deep learning (DL) techniques in water detection. DL is a machine learning methodology that consistently outperforms traditional approaches across diverse domains, including computer vision, object recognition, machine translation, and audio processing.

This project, named DeepAqua, seeks to enhance our understanding of surface water dynamics and their response to environmental changes by developing innovative DL architectures, such as Convolutional Neural Networks (CNN) and Transformers, designed specifically for the semantic segmentation of water-related images. It is worth noting that many DL models depend on substantial amounts of ground truth data, which can be costly to obtain. Our previous findings suggest that we can train a CNN using water masks based on the Normalized Difference Water Index (NDWI) to detect water in Synthetic Aperture Radar (SAR) imagery without the need for manual annotation. This breakthrough promises to have a significant impact on water monitoring since generating data based on NDWI masks is virtually cost-free compared to traditional methods involving fieldwork data collection and manual annotation.
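As an illustration of how NDWI-based water masks can be derived without manual annotation, the following is a minimal sketch in plain Python, not the project's pipeline. It uses the standard NDWI definition, (green - NIR) / (green + NIR), computed per pixel from optical reflectance. The sample reflectance values, the function names, and the threshold of 0.0 are assumptions for illustration; a real workflow would tune the threshold and read co-registered satellite rasters.

```python
def ndwi(green, nir):
    """Per-pixel NDWI = (green - nir) / (green + nir) for two equally sized 2D grids."""
    result = []
    for green_row, nir_row in zip(green, nir):
        row = []
        for g, n in zip(green_row, nir_row):
            total = g + n
            # Guard against division by zero for empty (no-data) pixels.
            row.append((g - n) / total if total != 0 else 0.0)
        result.append(row)
    return result


def water_mask(green, nir, threshold=0.0):
    """Label a pixel as water (1) when its NDWI exceeds the threshold, else 0.

    The default threshold of 0.0 is an assumed, commonly used starting point.
    """
    return [[1 if value > threshold else 0 for value in row]
            for row in ndwi(green, nir)]


# Hypothetical reflectance values for a 2x2 optical image patch.
green = [[0.30, 0.10],
         [0.25, 0.08]]
nir = [[0.05, 0.40],
       [0.10, 0.35]]

mask = water_mask(green, nir)
```

A mask produced this way from an optical image could then be paired with a SAR image of the same area and date as a training label for a segmentation network, which is the idea the paragraph above describes.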

Crossdisciplinary collaboration

The researchers in the team represent the Division for Water and Environmental Engineering (SEED/ABE), the Division of Software and Computer Systems (CS/EECS), KTH, and Stockholm University.