About the project

Objective

This research project aims to develop, implement, and validate an integrated AI-based predictive maintenance and life cycle assessment framework for smart buildings. Using the KTH Live-In Lab, a highly instrumented student residential facility in Stockholm, as a testbed, the project will leverage real-time data from over 150 sensors collecting data every 10 minutes to model degradation in HVAC, piping, and other technical systems. The goals are to:

- develop accurate machine learning models for predictive maintenance,

- link these models to dynamic LCA to estimate the net environmental and economic impacts, including the extension of building component lifespans and cost savings,

- evaluate trade-offs in sensor deployment, and

- identify an optimal balance between performance improvement and environmental footprint.

Background

Buildings account for a significant portion of global energy consumption and greenhouse gas emissions, making them a critical focus for sustainability efforts. Digital solutions, such as building monitoring systems, provide opportunities to optimize energy use and operational efficiency. However, their environmental implications, including production, operation, and disposal, remain largely underexplored. This interdisciplinary research aims to address this gap by developing, implementing, and validating a novel framework that combines predictive maintenance with LCA. This framework will quantify the net environmental impacts of Information and Communication Technology (ICT) systems in buildings, offering a comprehensive approach to assess both benefits and burdens.

The project leverages the KTH Live-In Lab, a student housing testbed in Stockholm equipped with over 150 sensor data points recorded every 10 minutes, to harness real-time data and advanced machine learning algorithms. These tools will predict failures in building subsystems, such as heating, ventilation, and piping systems, with the goal of extending their functional lifespan, reducing maintenance costs, and optimizing energy and material use. Using LCA methodology, the study will critically evaluate the environmental costs of digital infrastructures, including sensors and communication hardware, across their life cycle. By introducing dynamic feedback between maintenance strategies and environmental performance, the project will provide insights into direct impacts and broader trade-offs, supporting sustainable building practices.
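To give a sense of the data volume behind these figures, the sampling scheme can be turned into a rough reading count. This is a back-of-the-envelope sketch only: it takes 150 as a lower bound on the number of data points (the text says "over 150") and assumes every point reports on every 10-minute tick with no gaps, which real deployments rarely achieve.

```python
# Rough reading-count estimate for the sampling scheme described above.
# Assumptions (not from the source): exactly 150 data points, no missing samples.
MINUTES_PER_DAY = 24 * 60


def readings_per_day(data_points: int, interval_minutes: int) -> int:
    """Number of readings logged per day across all data points."""
    samples_per_point = MINUTES_PER_DAY // interval_minutes
    return data_points * samples_per_point


daily = readings_per_day(data_points=150, interval_minutes=10)
yearly = daily * 365

print(f"{daily} readings per day")    # 21600
print(f"{yearly} readings per year")  # 7884000
```

Under these assumptions the testbed yields at least about 7.9 million readings per year, which is the scale the degradation models would need to handle.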

Crossdisciplinary collaboration

This project introduces a novel integration of AI-based predictive maintenance with real-time LCA modeling, bridging gaps between building engineering, environmental science, and digitalization. Collaboration within the research team enables the assessment of both operational performance and environmental trade-offs, producing actionable insights for industry and policy. With established access to the KTH Live-In Lab, the team benefits from a high-resolution sensor network and a sophisticated building management system (BMS), both essential for testing and validating predictive models. Additionally, tools such as SimaPro/OpenLCA and KTH’s computing infrastructure support advanced modeling and simulations.

About the project

Objective

To develop DeepAqua-II, a robust, scalable deep-learning system for global surface-water monitoring using SAR time-series data.

Specific objectives (O1–O5):

- O1: Design a technique to normalize SAR pixel intensity values so models remain resilient to sensor adjustments.

- O2: Build a self-supervised semantic segmentation model using SAR time-series without needing optical data.

- O3: Add support for L-band SAR sensors to detect water under vegetation.

- O4: Quantify changes in surface-water extent across multiple climate regions for 2015–2027.

- O5: Communicate and disseminate results to maximize impact, including training and capacity building.
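As an illustration of what objective O1 is asking for, the sketch below shows one simple way to make SAR intensities insensitive to a change in sensor gain. It is not the project's technique, which the text does not describe. It assumes linear backscatter intensities and a purely multiplicative calibration change; the function name `normalize_sar` and the pixel values are hypothetical.

```python
import math
import statistics


def normalize_sar(intensities: list[float]) -> list[float]:
    """Map linear SAR backscatter intensities to a gain-invariant scale.

    Steps: convert to decibels, subtract the median, divide by the
    interquartile range (IQR). A constant gain factor becomes a constant
    dB offset, which the median subtraction removes.
    """
    db = [10.0 * math.log10(max(v, 1e-10)) for v in intensities]
    median = statistics.median(db)
    q1, _, q3 = statistics.quantiles(db, n=4)
    iqr = (q3 - q1) or 1.0  # avoid division by zero on flat images
    return [(v - median) / iqr for v in db]


# Hypothetical pixel values: dark water pixels and brighter land pixels.
scene = [0.01, 0.012, 0.011, 0.2, 0.25, 0.22, 0.3, 0.015]
recalibrated = [v * 1.8 for v in scene]  # same scene after a gain change

a = normalize_sar(scene)
b = normalize_sar(recalibrated)
print(all(math.isclose(x, y, abs_tol=1e-9) for x, y in zip(a, b)))  # True
```

Both versions of the scene map to the same normalized values, so a model trained on one would see identical inputs from the other. Real sensor adjustments may also change noise floors or incidence-angle behavior, which this sketch does not address.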

Background

Surface water is declining worldwide, requiring more accurate monitoring.

- Traditional monitoring depends on optical satellite imagery, which fails under clouds and vegetation.

- Existing SAR-based models require manual annotations and retraining whenever sensors change.

- The earlier DeepAqua project achieved strong performance but still depends on optical data and lacks resilience to sensor adjustments.

- The upcoming NISAR mission introduces L-band SAR, enabling deeper vegetation penetration.

- There is a global need for automated, scalable, optical-independent methods for long-term water-extent mapping.

Crossdisciplinary collaboration

Hydrology & Environmental Sciences

- Led by Professor Zahra Kalantari

- Expertise in water resources management, hydrology, climate-change impacts, and sustainability.

Computer Science & Machine Learning

- Led by Associate Professor Amir H. Payberah

- Expertise in scalable machine learning, deep learning, and time-series modeling.

Nature of collaboration

- Integrates SAR remote sensing, deep learning, and climate/land-water systems.

- Builds on joint results from DeepWetlands and DeepAqua.

- Enables a cooperative system for global-scale water monitoring.

About the project

Objective

The primary objective of this research is to discover methods for autonomously generating threat intelligence (TI) that empowers users to gain a competitive advantage over threat actors. The central focus of the study involves designing and developing large language model (LLM)-powered telemetry systems, including web scrapers and honeypots, that are immune to AI-based attacks. These systems are instrumental in collecting early threat signals. Furthermore, a significant aspect of the project involves utilizing a comprehensive set of LLM-driven advanced analytics to generate actionable threat insights that elucidate the nature of the attacks.

Background

The world of cyber threat intelligence (CTI) is undergoing a profound transformation due to the emergence of AI-based cybercrime chatbots, attack agents, and malware. Traditional CTI solutions have proven ineffective against AI-powered exploit kits, necessitating the design of end-to-end AI agents capable of reasoning about threat intelligence in a ubiquitous fashion. While a significant number of academic papers on LLM-driven CTI techniques had been published by 2025, these studies must be evaluated in the national context.

Since AI-based attacks pose a significant and tailored threat to every nation, it is crucial both to develop counter-AI systems from a national perspective and to give researchers the opportunity to build expertise in AI for CTI.

Crossdisciplinary collaboration

The researchers in this team hail from the Royal Hacking Lab at Cybercampus Sweden, the Division of Network and System Engineering (EECS/NSE) at KTH Royal Institute of Technology, and the Cybersecurity Unit at RISE Research Institutes of Sweden.

Upon successful completion of the research, the anticipated users include the Swedish Computer Emergency Response Team (CERT-SE) at MSB, the Cyber Defense Unit under the Division of Cyber Defence and C2 Technology at the Swedish Defence Research Agency (FOI), and the National Operations Department (NOA) at the Police Authority.

About the project

Objective

SambIoT provides strong solutions for secure and scalable 6G-enabled Ambient IoT, while being cognizant of sustainability. We pursue fundamental research on key enablers for scalable security for Ambient IoT. Our technical objectives are:

- System support and backscatter infrastructure for Ambient IoT devices

- Lightweight physical layer techniques for 6G network security

- Automated, large-scale deployment of Ambient IoT applications and data analysis via confidential computing in cloud-edge environments

- Scalable credential management as a service

Background

6G promises to revolutionize connectivity for billions of Internet of Things (IoT) devices with ultra-fast data rates and near-zero latency, far surpassing 5G. Ambient IoT, a central component of 6G, will enable a wide range of new IoT applications across industries: smart cities, agricultural and environmental monitoring, smart and ubiquitous healthcare, and environmental sustainability through advanced waste and resource management.

Scalability and security are the keys to the success of Ambient IoT. The promised vast device numbers and interconnectedness require efficient, reliable data processing solutions. Cloud and edge computing play a vital role in this context by handling computationally intensive tasks IoT devices cannot manage and enabling security-relevant tasks, from credential management support to secure data storage and processing.

Without security, many applications with high societal value (e.g., healthcare and smart grid) will not be deployed. Scaling applications with the number of devices increases the number of measurement points, which, e.g., in smart buildings, leads to better control and hence larger energy savings. Moreover, the devices themselves contribute to enhanced sustainability: they run without batteries, thereby reducing toxic waste, and future implementations can use more sustainable electronics and materials.

Cross-disciplinary collaboration

The project develops basic 6G security and privacy technologies, along with enhanced capabilities for AI, smart cities, and healthcare. Ambient IoT per se contributes to enhanced sustainability.

PIs of the project

Panos Papadimitratos, Professor, KTH, EECS

Thiemo Voigt, Professor, RISE Computer Science

Musard Balliu, Associate Professor, KTH, EECS

About the project

Objective

This project aims to develop and demonstrate AgentVision, a novel video–language model that combines video-based deep learning with multimodal large language model (mLLM) reasoning to enhance environmental perception for autonomous driving. Focusing on pedestrian crossing intention prediction, the project includes developing and training AgentVision using open datasets, deploying it on KTH’s Research Concept Vehicle (RCV-E) at ITRL, and testing it at the Arlanda track. Through integration of contextual reasoning and real-time perception, the project seeks to improve safety, robustness, and trust in autonomous vehicle systems, providing a blueprint for future intelligent and cooperative mobility solutions.

Background

Autonomous vehicles (AVs) depend on visual perception for situational awareness, yet existing systems face major challenges in complex real-world environments. Traditional vision models struggle with limited visibility, occlusions, and ambiguous interactions among multiple agents. Moreover, deep learning-based perception relies heavily on large, high-quality datasets, limiting robustness and generalization in diverse traffic conditions. These challenges hinder accurate understanding of pedestrian behaviors and other dynamic elements essential for safe navigation. Therefore, developing more adaptable and context-aware perception systems is crucial to enhance reliability, interpret complex scenes, and ensure safety in intelligent transportation and autonomous driving applications.

Crossdisciplinary collaboration

The project is an interdisciplinary collaboration among KTH’s ABE, SCI, and EECS schools and the startup company FleetMQ, integrating transport science and causal AI expertise to advance intelligent mobility innovation.

PI: Zhenliang Ma

Co-PI: Mikael Nybacka

About the project

Objective

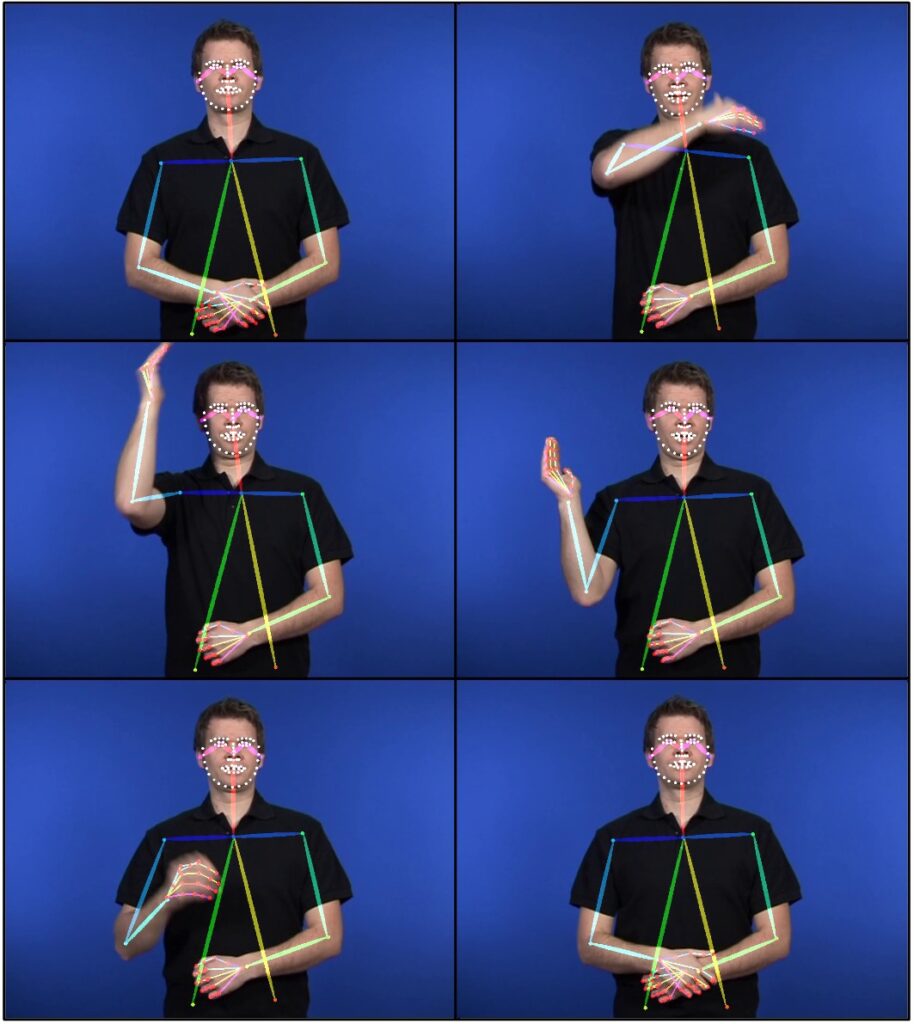

In this project we develop semantic tokenization models for sign language (SL) and combine these with large language models, leading to machine learning models that can understand, process, translate, and generate SL efficiently. To achieve this, we will pool together large data resources, drawing on our collaboration with SVT and on other large-scale multilingual sign language datasets.

We will fine-tune and evaluate our models across a number of downstream tasks, e.g., sign language recognition, segmentation, and production. The project addresses the need for inclusive and accessible urban infrastructures by reducing communication barriers for the deaf and signing communities, e.g., via automated translation and interpretation services that can enable seamless interaction between signers and non-signers, fostering integration in public services, workplaces, and social settings.

Background

Currently, we are seeing an AI revolution fueled by large language models. What started out as text-only models has developed into generalized multimodal information processing frameworks, handling many languages and different modalities such as images, speech, and video, often with surprising accuracy.

Sign languages are visuo-spatial natural languages used by more than 70 million people worldwide. Sign languages lack a text-based representation, and have not been part of, or benefited from, the large language model or foundation model developments. Furthermore, sign language technology is not being prioritized by the large corporate interests that are driving current AI developments.

Signed languages represent a special challenge since they, in contrast to spoken languages, have no universally adopted written form. For storage and transmission, one has to rely on video. For communication between signers and non-signers, either costly interpreter services are required, or one has to resort to limited text-based communication – which will be in a second language for a native signer. The promise and potential utility of SL technology are thus substantial in terms of reducing communication barriers and allowing signers to use language technology in their native language. Despite this, progress in SL technology has been limited in comparison to the rapid development for spoken languages. In this project we suggest an efficient way to allow sign language to inhabit the LLM ecosystem and benefit from the GPT revolution.

Cross-disciplinary collaboration

The proposed project is a multidisciplinary endeavor. Advancing the state of the art in SL processing through foundation models requires combined expertise in machine learning, spoken language engineering, data processing, SL corpora, and SL linguistics, including native sign-language users. The project team is composed to provide this expertise.

About the project

Objective

This project aims to explore the emerging gigantic multiple-input multiple-output (gMIMO) technology in the physical layer, using methodology from signal processing and information/communication theory. gMIMO relies on using 10 times more antennas in base stations and user devices than in current networks, by operating in the upper mid-band where antennas are smaller. This enables massive spatial multiplexing and beamforming. The research results are anticipated to be applied to 6G and future wireless communication networks and to provide valuable insights into their integration across a wide range of digital transformation efforts.
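The link between a higher band and more antennas can be illustrated with a small calculation. Antenna elements are commonly spaced about half a wavelength apart, so a panel of fixed size holds more elements as the carrier frequency rises. The sketch below is a rough illustration only: the 3.5 GHz and 11 GHz carrier frequencies and the 0.5 m square panel are assumptions chosen for the example, not values from the project description.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def elements_in_panel(freq_hz: float, side_m: float) -> int:
    """Count antennas in a square panel with half-wavelength spacing."""
    spacing = SPEED_OF_LIGHT / freq_hz / 2.0
    per_side = int(side_m / spacing)
    return per_side * per_side


# Assumed example frequencies and panel size (hypothetical values).
mid_band = elements_in_panel(3.5e9, side_m=0.5)
upper_mid_band = elements_in_panel(11e9, side_m=0.5)
print(mid_band, upper_mid_band, upper_mid_band / mid_band)
```

With these assumed values the same panel fits 121 elements at 3.5 GHz and 1296 at 11 GHz, roughly a tenfold increase, because the element count grows with the square of the carrier frequency.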

Background

Sixth-generation (6G) wireless communication is crucial for enabling society’s digital transformation and the future cyber-physical world. Many transformative applications, such as ultra-massive connectivity, immersive extended reality (XR) communication, smart factories, and intelligent transportation systems, can be efficiently facilitated by intelligent 6G wireless networks. To support these extensive usage scenarios, delivering exceptionally high data rates to many users is important. Since fourth-generation (4G) wireless networks, multiple-input multiple-output (MIMO) technology has been key to delivering higher rates.

With the advancement of wireless networks, MIMO technology has evolved significantly, with a continually increasing number of antennas. To further empower 6G and future wireless networks, gigantic MIMO (gMIMO), which uses hundreds or even a thousand antennas, is highly anticipated and is the major focus of this project.

About the Digital Futures Postdoc Fellow

Zhe Wang received his PhD degree in 2025 from the School of Electronic and Information Engineering at Beijing Jiaotong University, China. His research focuses on promising 6G wireless communication technologies, including gigantic MIMO, cell-free massive MIMO, and near-field communication.

Main supervisor

Emil Björnson, KTH

Co-supervisor

Vitaly Petrov, KTH