About the project

Objective

The project will develop a novel class of data-driven reduced-order models (ROMs) that can represent wind farm flow dynamics with high accuracy while being fast enough to support operational run-time analyses. The central aim is to bridge the gap between detailed computational fluid dynamics (CFD) simulations and the simpler models typically used in operational contexts by using CFD data to develop and train new machine-learning-based models. The research will follow a modular, progressive strategy, starting from the representation of a single turbine wake and then extending to the modeling of farm-level interactions.

Background

Wind farms operate in atmospheric conditions that vary across a wide range of spatial and temporal scales. At the farm scale, wakes develop and interact in ways that are difficult to capture with standard superposition-based engineering models, especially at sites with complex terrain, strong stability-driven variability, or farm-scale phenomena such as global blockage. These effects can cause systematic errors in power forecasting and in operating strategies.

High-fidelity CFD, for example large-eddy simulation, can capture these interactions at wind-farm scale, but its computational cost makes it impractical for real-time monitoring and frequent predictive analyses. By contrast, existing ROMs are often built on semi-empirical or engineering approximations that represent wakes through the superposition of velocity deficits, deflections, and added turbulence. Although computationally efficient, these models often fail to capture complex wake-wake interactions, terrain-induced flow effects, stability-dependent variability, and farm-scale phenomena such as blockage. Moreover, they are rarely designed to ingest real operational data, such as supervisory control and data acquisition (SCADA) logs, which encode the actual operating states of a wind farm. This motivates the need to bridge the gap between accurate but expensive simulations and fast but less reliable wake models, enabling near-real-time representations that can support forecasting, optimization, and future control strategies.
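To make concrete what "superposition of velocity deficits" means in the engineering models mentioned above, here is a minimal sketch of one classic example: the top-hat Jensen (Park) wake model combined with sum-of-squares superposition. It is not the project's method. The wake expansion coefficient `k`, the single thrust coefficient `ct` shared by all turbines, the hypothetical layout, and the function names are illustrative assumptions.

```python
import math


def jensen_deficit(dx, dy, diameter, ct, k=0.05):
    """Normalized velocity deficit of one turbine's wake (top-hat Jensen/Park model).

    dx: streamwise distance downstream of the turbine [m]
    dy: lateral offset from the turbine axis [m]
    diameter: rotor diameter [m]
    ct: thrust coefficient (assumed equal for every turbine in this sketch)
    k: wake expansion coefficient (illustrative value; site dependent)
    """
    if dx <= 0:
        return 0.0  # points at or upstream of the rotor see no wake in this model
    r0 = diameter / 2.0
    wake_radius = r0 + k * dx  # the wake widens linearly with distance
    if abs(dy) > wake_radius:
        return 0.0  # outside the top-hat wake
    return (1.0 - math.sqrt(1.0 - ct)) / (1.0 + k * dx / r0) ** 2


def effective_wind_speed(x, y, turbines, u_inf, diameter, ct, k=0.05):
    """Free-stream speed reduced by all upstream wakes, combined as a root sum of squares."""
    deficits = [jensen_deficit(x - tx, y - ty, diameter, ct, k) for tx, ty in turbines]
    total_deficit = math.sqrt(sum(d * d for d in deficits))
    return u_inf * (1.0 - total_deficit)


# Hypothetical layout: three turbines in a row along the wind, 7 rotor diameters apart.
D = 100.0
row = [(0.0, 0.0), (7 * D, 0.0), (14 * D, 0.0)]
for tx, ty in row:
    print(f"x = {tx:6.0f} m: u = {effective_wind_speed(tx, ty, row, 10.0, D, ct=0.8):.2f} m/s")
```

Each turbine's deficit is computed in isolation and the results are combined algebraically, so effects such as blockage, terrain-induced flow, or stability changes have no place to enter. Practical engineering models refine this (for example, using the local inflow speed and a speed-dependent thrust coefficient), but the superposition structure stays the same.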

About the Digital Futures Postdoc Fellow

Filippo De Girolamo is a mechanical engineer and researcher working at the intersection of computational fluid dynamics and machine learning for wind energy applications. He holds a PhD in Energy and Environment from Sapienza University of Rome in Italy, with research focused on wind turbine flows and data-driven modeling of wake dynamics in offshore environments. He has experience across multiple wind energy problems, including wake and turbulence modeling with large-eddy simulation, data-driven wake decomposition via unsupervised learning, and SCADA-based diagnostics for wind farm monitoring. He also carried out a research visit at the University of Texas at Dallas, working on high-fidelity simulations of real wind farms in complex terrain.

Main supervisor

Prof. Dan Henningson, KTH

Co-supervisor

Prof. Hedvig Kjellström, KTH

About the project

Objective

This project aims to develop a reliable and robust AI tool for early fault detection in power transformers. By combining physical laws with limited operational data, the tool is intended to predict the health and remaining lifetime of power transformers efficiently. Such a tool would enable proactive maintenance, reducing costs and enhancing power grid reliability.

Background

Power transformers play a silent but crucial role in our daily lives. They enable the transmission of electricity over long distances, powering homes, hospitals, industries, and public infrastructure. Although they usually operate unnoticed, transformers are subject to high stress and age over time. When a transformer fails, the consequences can be severe, including power outages, costly repairs, and safety risks. Today, many transformer failures happen because problems are detected too late, mainly due to limited and incomplete monitoring data.

To reduce these risks, a transformer’s condition is assessed using various indicators, such as gas measurements, thermal images, vibration signals, and magnetic behavior. However, collecting large amounts of high-quality data from all these sources is difficult and expensive. Most existing AI methods depend heavily on large datasets, which limits their usefulness in real-world power systems. The project tackles this challenge by developing an AI model that combines data with physical knowledge about how transformers work. By embedding physics directly into the learning process, the model can learn meaningful patterns even from limited data.
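One common way to embed physics into learning, shown here purely as an illustration, is a physics-informed loss: the model is penalized both for mismatching the few available measurements and for violating a governing equation at time steps where no data exist. The sketch assumes a hypothetical first-order lumped thermal model for the top-oil temperature, tau * dθ/dt = load_rise - (θ - θ_amb). The model, its parameters, and the function name are assumptions; this is neither the project's neural operator nor a standardized transformer thermal model.

```python
def physics_informed_loss(theta_pred, times, measured, ambient, load_rise, tau, lam=1.0):
    """Data misfit plus the residual of an assumed first-order thermal ODE.

    theta_pred: predicted top-oil temperatures at `times` [°C]
    times: sample times [s]
    measured: dict {index: measured temperature} for the few sampled time steps
    ambient: ambient temperature at each time step [°C]
    load_rise: steady-state temperature rise implied by the load at each step [K]
    tau: thermal time constant [s] (assumed)
    lam: weight of the physics term relative to the data term
    """
    # Data term: mean squared error only where measurements exist.
    data_loss = sum((theta_pred[i] - m) ** 2 for i, m in measured.items()) / len(measured)

    # Physics term: forward-difference residual of tau * dθ/dt = load_rise - (θ - θ_amb),
    # evaluated on every interval, including those without measurements.
    residuals = []
    for i in range(len(times) - 1):
        dtheta_dt = (theta_pred[i + 1] - theta_pred[i]) / (times[i + 1] - times[i])
        rhs = load_rise[i] - (theta_pred[i] - ambient[i])
        residuals.append(tau * dtheta_dt - rhs)
    physics_loss = sum(r * r for r in residuals) / len(residuals)

    return data_loss + lam * physics_loss
```

In a real pipeline, `theta_pred` would be the output of a trainable model and this loss would be minimized by gradient descent; the physics term is what constrains the prediction between sparse measurements.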

The proposed approach uses a physics-enhanced multimodal neural operator framework that can combine information from multiple data sources and predict how long a transformer can continue to operate safely. The model is designed to be fast, reliable, and suitable for real-time monitoring. By enabling early fault detection and timely maintenance decisions, this research supports the digital transformation of power infrastructure and contributes to a more stable, efficient, and sustainable energy grid.

About the Digital Futures Postdoc Fellow

Abhishek Chandra is a Postdoctoral Research Fellow at the School of Electrical Engineering and Computer Science at KTH Royal Institute of Technology. He holds a PhD in Electrical Engineering from Eindhoven University of Technology, the Netherlands, where his research focused on developing AI tools for characterizing piezoelectric and magnetic materials. His academic background includes applied mathematics, scientific computing, and AI. Abhishek has received several prestigious fellowships and scholarships, including the Digital Futures Postdoctoral Fellowship at KTH and the Information and Knowledge Society Scholarship at Université de Lille. With expertise in scientific machine learning and energy systems, his work aims to bridge the gap between theoretical AI models and practical engineering applications in critical infrastructure.

Main supervisor

Prof. Dr. Lina Bertling Tjernberg, Full Professor, Department of Electric Power and Energy Systems, EECS, KTH.

Co-supervisor

Prof. Dr. Cristian Rojas, Full Professor, Department of Decision and Control Systems, EECS, KTH.

About the project

Objective

The aim of the project is to develop control-theoretic tools that can handle coarse models, in particular the kind of coarse models typically found in synthetic biology. Mathematically, we capture ‘coarseness’ through topological dynamical systems theory and aim to provide control-theoretic counterparts to well-established index theories. Developing the theory is the first step; the second is to integrate these tools directly into data-driven pipelines.
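For readers unfamiliar with index theory, the classical starting point is the index of a planar vector field along a closed curve: the number of full turns the field makes while the curve is traversed once. It is a topological invariant (it does not change under continuous deformations of the field, as long as no zero of the field crosses the curve), which is what makes it suitable for coarse, qualitative models. The sketch below estimates it numerically on a circle. It shows only the classical, uncontrolled notion, not the control-theoretic counterparts the project develops; the function name and sampling resolution are illustrative choices.

```python
import math


def index_along_circle(field, center=(0.0, 0.0), radius=1.0, samples=2000):
    """Winding number of a planar vector field along a circle (the classical Poincaré index).

    field: function (x, y) -> (fx, fy); it must not vanish on the circle.
    The field's angle is tracked between neighbouring sample points and the wrapped
    increments are summed; the total divided by 2*pi is the index.
    `samples` must be large enough that the field turns less than pi between samples.
    """
    cx, cy = center
    total = 0.0
    prev = None
    for j in range(samples + 1):  # the last sample closes the loop
        t = 2.0 * math.pi * j / samples
        fx, fy = field(cx + radius * math.cos(t), cy + radius * math.sin(t))
        angle = math.atan2(fy, fx)
        if prev is not None:
            # wrap the increment into [-pi, pi) so atan2's branch cut adds no spurious turns
            step = (angle - prev + math.pi) % (2.0 * math.pi) - math.pi
            total += step
        prev = angle
    return round(total / (2.0 * math.pi))


print(index_along_circle(lambda x, y: (x, y)))   # source at the origin
print(index_along_circle(lambda x, y: (x, -y)))  # saddle at the origin
```

For planar systems, a classical consequence is that any periodic orbit must enclose equilibria whose indices sum to +1: a qualitative, model-independent constraint of the kind that remains meaningful for coarse biological models.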

Background

Several pressing biological questions of today have a strong control-theoretic component: for example, we want not only to describe a cancerous cell but also to prescribe its dynamics. Compared with classical engineering fields, biology usually lacks the type of models that contemporary control theory handles well. Instead, biological models are typically coarse and largely qualitative. In this project we accept this coarseness, take a topological viewpoint, and develop control-theoretic tools at precisely this level of granularity. We focus in particular on genetic regulatory networks that can or should generate oscillations, because of their considerable practical and theoretical appeal.

About the Digital Futures Postdoc Fellow

Wouter Jongeneel is a control theorist fascinated by topology and the life sciences. He received his PhD in Electrical Engineering from EPFL in Switzerland. Prior to that, he received an MSc in Systems & Control from TU Delft in the Netherlands. His research is centered on understanding the interplay between the structural features of a system and the qualitative behaviour it can display.

Main supervisor

Karl Henrik Johansson, KTH

Co-supervisor

Martina Scolamiero, KTH

About the project

Objective

The project aims to establish the technical, organizational, and legal foundations for an AI-based feedback system that supports operators at Stockholm City’s Elderly Safety Call Center. The system will function as a co-pilot, offering insights, alerts, and structured performance feedback to strengthen decision-making and professional development. A central objective is to analyze operators’ decision-making processes and the operational challenges they face in high-stakes, time-critical situations.

Another key objective is to identify the legal, ethical, and technical boundaries for data exchange across municipal and regional healthcare infrastructures. These insights will guide the design and prototyping of an LLM-based feedback system that enables post-call analysis and continuous learning. In the longer term, the project outcomes will inform the development of a real-time feedback system.

Background

Elderly safety call centers play a critical role in Sweden’s elderly care system. Operators handle acute welfare and emergency-related calls, coordinate between medical and elderly home care actors, and make rapid decisions regarding additional services or short-term interventions. Because the service operates during evenings, nights, and weekends, when regular elderly care services have limited staffing, operators manage cases under considerable uncertainty and time pressure. Their work is guided by a multitude of rules, guidelines, and policies, yet operators receive little structured feedback on the quality or outcomes of their decisions.

At the same time, demographic developments increase the need for efficient decision-making. The need for elderly care is projected to grow substantially, which will increase staffing requirements. Managing this development without compromising quality will require new forms of digital support, particularly for staff working in high-stakes, time-critical environments such as night and weekend operations.

Current CRM systems in elderly care call centers provide access to information but offer limited decision support, structured learning, or follow-up of outcomes. Stressful decision-making with little support affects both staff well-being and the quality of services provided to older adults. Strengthening decision support has the potential to reduce errors, improve consistency, and enhance both staff and patient well-being, while also generating significant savings in public resources.

Against this backdrop, AI-powered feedback systems offer a promising avenue. Recent advances in large language models (LLMs) and data-driven communication analysis open new possibilities for post-call learning, performance feedback, and more systematic follow-up of decision outcomes. This project aims to address these challenges by developing an AI-based feedback system that supports operators in elderly safety call centers. By enabling structured post-call analysis, reflective learning, and improved decision-making, the project seeks to enhance operator support, strengthen the resilience of elderly care services, and contribute to a sustainable response to the demographic challenges ahead.

Cross-disciplinary collaboration

The project team combines expertise in health care logistics, sociological analysis, service and systemic design, complex system modeling, and AI and knowledge graph methods. This mix of competencies supports observational studies, joint exploration and co-creation in the call center, and the development and training of LLMs. The work is carried out in close cooperation between KTH, the City of Stockholm, Region Stockholm, and Stockholm University.

PI: Sebastiaan Meijer, KTH, Department of Biomedical Engineering and Health Systems

Co-PI: Magnus Eneberg, KTH, Department of Engineering Design

About the project

Objective

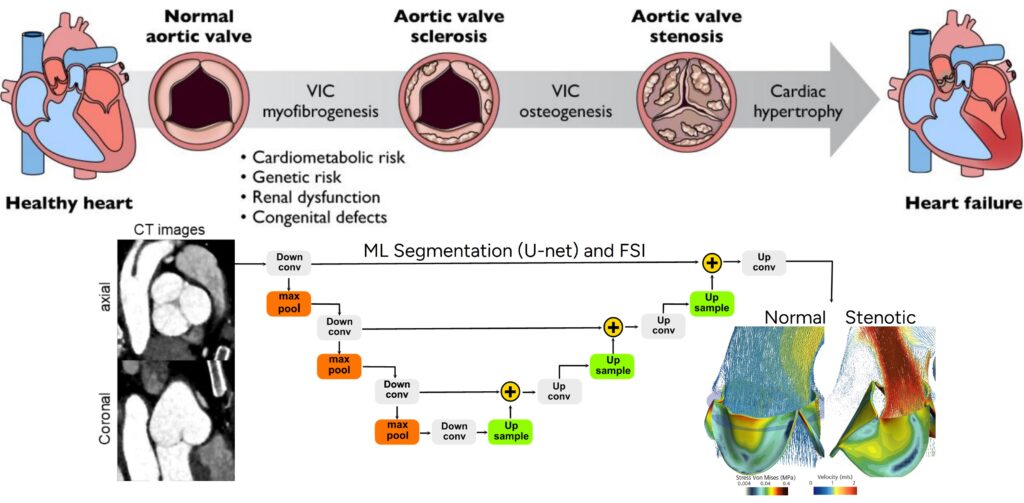

The overall objective of this project is to develop a predictive model for the progression of Aortic Valve Stenosis (AVS) by integrating repeated CT imaging, fluid–structure interaction (FSI) simulations, and deep learning techniques. Through comprehensive analysis of large-scale clinical datasets and high-fidelity simulations, this project seeks to move beyond conventional imaging and hemodynamic metrics to enable early detection, individualized prognosis, and improved clinical decision-making in AVS management.

Background

Aortic valve stenosis (AVS) is dangerous because it obstructs blood flow out of the heart, increasing pressure within the heart and raising the risk of heart failure, arrhythmias, and other severe complications. Over 2 million people worldwide are affected by the condition, and its prevalence is increasing as the population ages. It is often diagnosed through imaging techniques such as echocardiography and CT scans. Treatment options include surgical and transcatheter aortic valve replacement (SAVR/TAVR), both of which aim to relieve symptoms and improve heart function; however, it remains uncertain which approach is best for an individual patient given their health condition and the severity of the disease.

There is therefore a critical need for a physics-based understanding of AVS progression, as current recommendations for AVS management and monitoring do not fully account for the complex interaction between hemodynamics (blood flow) and biomechanics (tissue deformation) that drives valve degeneration and calcification. In particular, an elevated oscillatory shear index and high shear stress in certain regions of the valve and aorta, along with altered cyclic loading, contribute to pathological changes in the valve, exacerbating stenosis and complicating treatment outcomes.
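The oscillatory shear index (OSI) quantifies how much the wall shear stress vector reverses direction over a cardiac cycle. With the commonly used definition, OSI = ½ (1 − |∫ τ_w dt| / ∫ |τ_w| dt), it ranges from 0 for purely unidirectional shear to 0.5 for fully reversing shear; the time-averaged wall shear stress (TAWSS) is the usual companion measure of shear magnitude. The sketch below evaluates both at a single wall point from uniformly sampled shear vectors using a rectangle rule. The sampling scheme, the zero-shear convention, and the function name are assumptions; an FSI post-processing pipeline would typically evaluate these at every wall node.

```python
import math


def shear_metrics(tau, dt):
    """Time-averaged WSS magnitude and oscillatory shear index at one wall point.

    tau: wall shear stress vectors (tx, ty, tz) [Pa], uniformly sampled over one cardiac cycle
    dt: sampling interval [s]
    """
    period = len(tau) * dt
    # ∫ tau dt, component by component (rectangle rule)
    mean_vector = [sum(component) * dt for component in zip(*tau)]
    # ∫ |tau| dt
    magnitude_integral = sum(math.sqrt(sum(c * c for c in v)) for v in tau) * dt
    tawss = magnitude_integral / period
    if magnitude_integral == 0.0:
        return tawss, 0.0  # no shear at all: OSI is undefined; this sketch reports 0
    osi = 0.5 * (1.0 - math.sqrt(sum(c * c for c in mean_vector)) / magnitude_integral)
    return tawss, osi
```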

Cross-disciplinary collaboration

This project establishes a new, complementary cross-disciplinary collaboration that unites experts in cardiovascular imaging, fluid dynamics, and machine learning to advance predictive modeling of aortic valve disease:

- Elias Sundström, a researcher in Fluid Mechanics at the Department of Engineering Mechanics, KTH, specializes in developing computational models to elucidate the complex fluid–structure interactions that occur within the human body.

- Magnus Bäck is a senior physician and Professor in Cardiology at Karolinska Institutet (Translational Cardiology), with a research focus on aortic valve stenosis.

- Payam Esfahani is a postdoctoral researcher at KI in Magnus Bäck’s research group, with a research focus on advanced AI/ML methods applied to biomedical imaging, and will remain a key contributor to the development of the project’s methodologies.

About the project

Objective

The CARE-AI (Causal Adaptive Reasoning for Effective Hospital Admission Interventions) project aims to fundamentally change how hospitals prevent patient readmissions. Today’s AI systems can predict which patients are at high risk of readmission. However, they cannot tell doctors what to do to stop it.

This project moves beyond simple prediction to intervention optimization. We are developing a framework using ‘causal AI,’ a method that identifies cause-and-effect relationships, to answer the most important question: ‘Which specific intervention will most benefit this specific patient to avoid readmission?’ Rather than only marking a patient as high-risk, the CARE-AI system will guide clinicians in choosing the best, personalized steps. This could mean adjusting medication, setting up a follow-up appointment, or offering education tailored to the patient.

The two-year project has two main phases. In the first phase, we will develop the core causal AI algorithms (AI methods that determine which actions cause better outcomes for which groups of patients) using comprehensive, anonymized health data from Region Stockholm’s electronic health records (EHR) and the VAL databases (VAL is a regional healthcare database). In the second phase, we will conduct a Randomized Controlled Trial (RCT) at Södersjukhuset. In this trial, 250-300 high-risk patients will be randomized to receive either standard care or personalized interventions guided by the new causal AI framework.
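To illustrate the difference between predicting risk and estimating which intervention helps whom, the sketch below estimates, within each patient subgroup, how much each intervention changes the readmission rate relative to standard care, and recommends the intervention with the largest estimated reduction. It is a deliberately simple stand-in (a stratified difference in means, i.e. a T-learner whose per-arm models are subgroup averages), not the CARE-AI algorithms. Subgroup labels, intervention names, and the `standard_care` control label are hypothetical. The estimates have a causal reading only when treatment assignment is randomized, or otherwise unconfounded, within each subgroup, which is exactly what an RCT is designed to guarantee.

```python
from collections import defaultdict


def estimate_effects(records, control="standard_care"):
    """Per-subgroup difference in readmission rate versus the control arm.

    records: iterable of (subgroup, intervention, readmitted) with readmitted in {0, 1}.
    Returns {subgroup: {intervention: rate_intervention - rate_control}};
    negative values mean the intervention is estimated to reduce readmission.
    """
    counts = defaultdict(lambda: [0, 0])  # (subgroup, intervention) -> [readmissions, patients]
    for subgroup, intervention, readmitted in records:
        c = counts[(subgroup, intervention)]
        c[0] += readmitted
        c[1] += 1
    rates = {key: r / n for key, (r, n) in counts.items()}

    effects = defaultdict(dict)
    for (subgroup, intervention), rate in rates.items():
        # Skip the control arm itself and subgroups without a control comparison.
        if intervention == control or (subgroup, control) not in rates:
            continue
        effects[subgroup][intervention] = rate - rates[(subgroup, control)]
    return dict(effects)


def recommend(effects, subgroup, control="standard_care"):
    """Pick the intervention with the largest estimated risk reduction, else the control."""
    options = effects.get(subgroup, {})
    if not options:
        return control
    best = min(options, key=options.get)
    return best if options[best] < 0 else control
```

With observational EHR data, the subgroup averages would need to be replaced by estimators that adjust for confounding; the interface of returning per-subgroup effects and choosing the best action could stay the same.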

The ultimate goal of the project is a clinically validated AI tool that provides clear, interpretable recommendations to healthcare staff, leading to fewer readmissions, better patient outcomes, and more efficient use of healthcare resources.

Background

Hospital readmissions are a critical and costly challenge for healthcare systems worldwide. In Sweden, 15.2% of all patients are readmitted to the hospital within 30 days. This figure rises to an alarming 28% for elderly patients with multiple chronic conditions.

The core problem is that many of these readmissions are preventable. The “one-size-fits-all” approach to discharge and follow-up isn’t effective. Current predictive AI models fall short because they are based on correlation, not causation. They can identify that a patient looks like other patients who were readmitted. But they can’t determine why or identify the specific, modifiable factor that would change the outcome for that individual.

This project tackles that fundamental gap. We’re moving from asking “Who is likely to be readmitted?” to “Who is likely to benefit from which intervention to avoid readmission?” By identifying the causal drivers of readmission, we can target interventions that make a real difference. With each hospital bed costing approximately 12,000 SEK per day, preventing unnecessary readmissions is crucial for a sustainable healthcare system.

Cross-disciplinary collaboration

The CARE-AI project is built on a “triple-helix” collaboration. This approach brings together world-class expertise from academia, clinical healthcare, and industry. This structure is essential to ensure the project is not only scientifically rigorous but also clinically relevant and practically implementable.

- KTH Royal Institute of Technology (Academia): Karl Henrik Johansson, Prof., and Umar Niazi, researcher, provide the project’s methodological leadership. They will develop novel causal AI, machine learning, and reinforcement learning algorithms. These algorithms form the core of the CARE-AI framework.

- Karolinska Institutet / Södersjukhuset (Healthcare): Patrik Lyngå, Assoc. Prof., and Raffaele Scorza, M.D. and Ph.D., provide crucial clinical expertise and validation. They are responsible for designing and executing the RCT. This ensures the AI’s recommendations are medically sound, safe, and effective in a real-world hospital environment.

- Sirona Group (Industry): Jenny Censin, M.D. and Ph.D., provides deep implementation expertise and a pathway to real-world deployment. Sirona brings experience in healthcare data analytics and existing platforms like AINA. This will be key to integrating the new AI framework into current clinical workflows and health data systems.

This close collaboration ensures a constant feedback loop. Clinical needs from Södersjukhuset and implementation challenges from Sirona directly inform the AI development at KTH. At the same time, the new AI tools are immediately tested and refined in a clinical setting.

About the project

Objective

This project aims to advance, evaluate and demonstrate three interactive sonic prototypes designed to support sleep across three critical stages: sleep onset, the sleep period, and awakening. The SoundAsleep app recommends sleep soundscapes based on the user’s mood; the Sonic Blankets are interactive sonic textiles designed to support sleep through soothing auditory and tactile stimulation; and the SoundRise alarm fosters a gentle and natural awakening experience by emitting a gradually rising sound starting below the ambient room tone. Prototypes will be advanced through contextual adaptation, which enhances their personalisation and sonic versatility, and through participatory design that engages participants of varied ages and genders.
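The SoundRise behaviour described above, a sound that starts below the ambient room tone and rises gradually, can be sketched as a level schedule. This minimal example assumes a linear ramp in decibels from a fixed offset below the measured ambient level to a target level; the offset, target, duration, step size, and function name are hypothetical parameters, not the prototype's actual design.

```python
def sunrise_schedule(ambient_db, start_offset_db=-10.0, target_db=55.0,
                     duration_s=600.0, step_s=60.0):
    """Return (time_s, level_db, gain) triples for a gradually rising alarm.

    ambient_db: measured ambient room level [dB]
    start_offset_db: how far below ambient the sound starts (negative = below)
    target_db: final playback level [dB]
    gain: linear amplitude factor relative to the target level, for scaling audio samples
    """
    start_db = ambient_db + start_offset_db
    if target_db < start_db:
        raise ValueError("target level must not be below the starting level")
    steps = int(round(duration_s / step_s))
    schedule = []
    for i in range(steps + 1):
        t = i * step_s
        level = start_db + (target_db - start_db) * i / steps  # linear ramp in dB
        gain = 10.0 ** ((level - target_db) / 20.0)  # dB difference -> amplitude ratio
        schedule.append((t, level, gain))
    return schedule
```

Ramping in decibels rather than in linear amplitude means the amplitude grows exponentially, which tends to sound like a steady, gentle increase because loudness perception is roughly logarithmic.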

Background

Sleep disturbances are common conditions that affect both physical and mental health, yet few technologies exist to support sleep quality. Cultural and historical practices highlight sound’s therapeutic potential. In addition to singing and music, broadband noise, natural soundscapes, and specific tones are gaining popularity. But designing sound alone is insufficient. Interventions need to consider demographic and contextual factors, such as age, gender, and the physical environment, which substantially affect sleep quality. In recent years, sleep technologies have started to compete with traditional sleep interventions, yet many of these technologies remain under-evaluated.

This research aims to contribute to the development of non-pharmacological, low-risk, personalised and adaptive sleep interventions, while simultaneously enhancing public awareness of the role of sleep in health and wellbeing.

Cross-disciplinary collaboration

The researchers in the team represent the KTH School of Electrical Engineering and Computer Science, Department of Media Technology and Interaction Design, and the KTH School of Engineering Sciences in Chemistry, Biotechnology and Health, Department of Biomedical Engineering and Health Systems.