About the project

Objective

DeepFlood aims to develop novel hybrid models and flood maps with water depth information to support real-time decision-making, and to present them to the Swedish and international scientific community and to stakeholders. The research will improve our fundamental understanding of flood mapping based on synthetic aperture radar (SAR) by developing novel hybrid models that combine polarimetric SAR (PolSAR), metaheuristics, and deep learning (DL).

Background

Precise and fast flood mapping will help water resources managers, stakeholders, and decision-makers mitigate the impact of floods. Rapid detection of flooded areas and information about water depth are critical for assisting flood responders, such as operation specialists and local and state authorities, and for increasing the preparedness of the broader community through actions such as home risk mitigation and evacuation planning.

This project seeks to fill current knowledge gaps in flood management by enabling accurate and rapid flood mapping and providing water depth information using novel hybrid PolSAR-metaheuristic-DL models and high-resolution remote sensing data. It will also advance flood detection and support notification systems by identifying 1) the bands and polarizations that contain the most information for detecting flooded areas in different land covers; 2) the most effective PolSAR features in each band for flood mapping; 3) whether the most informative PolSAR features are the same for different land covers; and 4) which of the widely used metaheuristic and DL models are most efficient for detecting flooded areas and estimating water depth.

Crossdisciplinary collaboration

The researchers in the team represent KTH Royal Institute of Technology and Stockholm University.

About the project

Objective

We propose to use hybrid testing to innovate data-driven solutions for cardiac assistance. The project aims to enable data-driven evaluation of novel cardiac support devices to allow a rich and healthy life for patients with cardiovascular disease.

Background

We are currently witnessing an epidemic of heart failure with a rising incidence in the general population worldwide (2–7%) and a mean survival of only five years. The last decade has seen tremendous advances in device-based treatment options. However, progress has stalled, with only one device being currently approved for use in humans.

At the same time, novel hybrid mock circulation loops have been developed, allowing a physical device to interact with a digital model of the human cardiovascular system. Here at KTH, we built Sweden’s first cardiovascular hybrid mock circulation. With it, we can mimic an unprecedented number of virtual and physical implantations of candidate cardiac assistive technologies. The hope is that machine learning approaches can help identify the ideal position and actuation profile of the cardiac assist device of the future.

Crossdisciplinary collaboration

The researchers in the team represent the KTH School of Electrical Engineering and Computer Science and the School of Chemistry, Biotechnology, and Health.

About the project

Objective

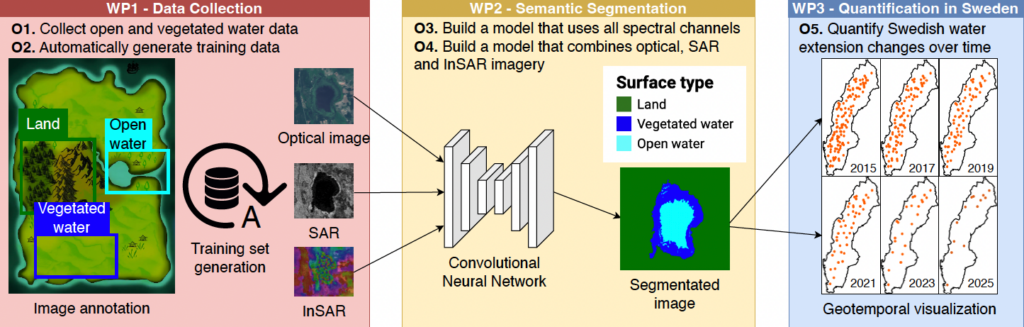

The main purpose of the DeepAqua project is to quantify changes in surface water over time. We want to create a real-time monitoring system for changes in water bodies by combining remote sensing technologies, including optical and radar imagery, with deep learning techniques for computer vision and transfer learning. This strategy will allow us to calculate water extent and level dynamics with unprecedented accuracy and response time. The approach offers a practical, adaptable, and scalable solution for monitoring water extent and level dynamics in support of water conservation efforts.

Background

Climate change presents one of the most formidable challenges to humanity. In the current year, we have witnessed unprecedented heatwaves, extreme floods, an increasing scarcity of water in various regions, and a troubling surge in the global extinction of species. Halting the advance of climate change necessitates the preservation of our existing water resources. At the same time, recent advancements in remote sensing technology have yielded a wealth of high-quality data, opening up new avenues for researchers to leverage deep learning (DL) techniques in water detection. DL is a machine learning methodology that often outperforms traditional approaches across diverse domains, including computer vision, object recognition, machine translation, and audio processing.

This project, named DeepAqua, seeks to enhance our understanding of surface water dynamics and their response to environmental changes by developing innovative DL architectures, such as Convolutional Neural Networks (CNN) and Transformers, designed specifically for the semantic segmentation of water-related images. It is worth noting that many DL models depend on substantial amounts of ground truth data, which can be costly to obtain. Our previous findings suggest that we can train a CNN using water masks based on the Normalized Difference Water Index (NDWI) to detect water in Synthetic Aperture Radar (SAR) imagery without the need for manual annotation. This breakthrough promises to have a significant impact on water monitoring since generating data based on NDWI masks is virtually cost-free compared to traditional methods involving fieldwork data collection and manual annotation.
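The NDWI-based labelling idea can be illustrated with a minimal sketch. It assumes the standard NDWI definition, (green − NIR) / (green + NIR), and a threshold of 0 for separating water from non-water; the function names, the threshold, and the toy reflectance values are illustrative assumptions, not the project's actual pipeline. In a setup like the one described, masks of this kind would serve as training labels for SAR images of the same area.

```python
import numpy as np


def ndwi(green: np.ndarray, nir: np.ndarray, eps: float = 1e-9) -> np.ndarray:
    """Per-pixel NDWI = (green - nir) / (green + nir)."""
    green = green.astype(np.float64)
    nir = nir.astype(np.float64)
    # eps avoids division by zero where both bands are zero.
    return (green - nir) / (green + nir + eps)


def water_mask(green: np.ndarray, nir: np.ndarray, threshold: float = 0.0) -> np.ndarray:
    """Boolean mask: True where NDWI exceeds the (assumed) threshold."""
    return ndwi(green, nir) > threshold


# Toy 2x2 reflectance tiles (made-up values, not real satellite data).
green = np.array([[0.30, 0.10], [0.25, 0.05]])
nir = np.array([[0.05, 0.40], [0.10, 0.30]])

mask = water_mask(green, nir)
print(mask)
```

Water reflects more green light than near-infrared, so pixels with positive NDWI are marked as water here. A real workflow would likely tune the threshold per scene and handle clouds and missing data.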

Crossdisciplinary collaboration

The researchers in the team represent the Division for Water and Environmental Engineering (SEED/ABE), the Division of Software and Computer Systems (CS/EECS), KTH, and Stockholm University.

About the project

Objective

This project aims to establish an AI-based online platform for automated and robust personalization and positioning of human body models (HBMs), focusing on baby HBMs. This eliminates the need for users to handle personalization and positioning themselves, which is often challenging and tedious. The platform could therefore be a transformative tool for driving innovations relating to HBMs.

Background

Finite element HBMs are digitalized representations of the human body and have emerged as significant tools for driving industrial innovation and clinical applications. These models are often provided as a baseline in a specified position, so they must be personalized and positioned before use. Despite continuous active development, HBM positioning remains challenging and tedious.

Crossdisciplinary collaboration

This project brings together expertise in biomechanical modeling and artificial intelligence, involving researchers from the KTH School of Electrical Engineering and Computer Science and from Applied AI at the Department of Industrial Systems at Research Institutes of Sweden (RISE).

Former project name: Virtual Baby Plattform

About the project

Objective

In collaboration with Karolinska University Hospital (KUH) and Karolinska Institute (KI), the PI and Co-PI at KTH propose the ISPP postdoc project EMERDENSY to develop trustworthy machine learning algorithms with explainable outcomes and then use these algorithms to design Early Warning Systems (EWS).

Background

Artificial Intelligence (AI) can be used to detect infection. Often, doctors and nurses cannot be sure about the growth of infection due to the absence of clearly visible symptoms. Once infection starts, the body’s immune system starts to fight bacteria and viruses. Physiological parameters of the body, such as heart rate, blood pressure, breathing patterns, and temperature, change slowly.

AI can detect subtle changes that humans cannot. AI can also predict infection type and patient deterioration. The medical care team can then devote precious time to deciding on life-saving interventions. This heralds the use of AI-based early warning systems (EWS).

The big question: can we trust AI systems, and in particular their core, the machine learning used for data analysis and predictions? Can the machine learning algorithms explain their predictions to healthcare personnel?

Partner Postdoc(s)

Yogesh Todarwal

Main supervisor

Saikat Chatterjee, Associate Professor, Division of Information Science and Engineering at KTH

Co-supervisor

Sebastiaan Meijer, Professor and Vice Dean, Division of Health Informatics and Logistics at KTH

About the project

Objective

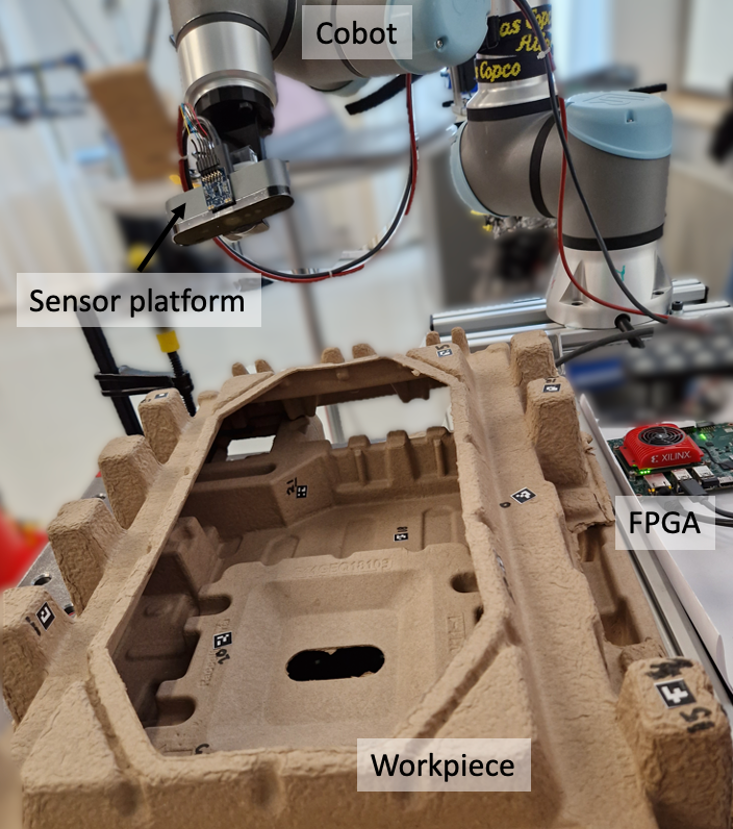

Atlas Copco is a world leader in safety-critical assembly tools widely used in industries such as automotive, aviation, and mobile phones. To maintain its world-leading position, Atlas Copco would like to error-proof its handheld tightening tools so that, depending on the assembly, the bolts are tightened with the right tightening program and in the right sequence, and no bolts are left untightened. Atlas Copco would like to use an AI/ML-based sensor fusion system to achieve this. The form factor, power budget, latency, and cost requirements rule out commercial off-the-shelf (COTS) components such as GPUs, TPUs, or FPGAs. ASICs (Application Specific Integrated Circuits) would meet these constraints, but they take too long to develop, are very expensive, and require specialist competence that Atlas Copco lacks. KTH has developed a Lego-inspired design framework called SiLago that provides ASIC-comparable energy efficiency and form factor but allows non-specialists, such as mechatronic and AI/ML experts from Atlas Copco, to create a ready-to-manufacture custom chip.

The objective of this demo project is to validate the claim of SiLago by showing that it can be used by non-specialists to implement this challenging AI/ML system and meet its constraints.

Background

Industry 4.0, or the Fourth Industrial Revolution, refers to a significant transformation in how manufacturing and other industries operate, characterized by the integration of digital technologies, automation, data exchange, and smart systems. To align its product offerings with the Industry 4.0 vision, Atlas Copco has launched a pilot project to error-proof its tightening tools to improve the reliability, flexibility, and productivity of the assembly process. To achieve these objectives, it is developing an AI/ML-based sensor fusion system. Energy- and cost-efficient implementation of such systems is a well-recognized challenge, and many large actors, such as Tesla and Google, have opted for an ASIC-based design. However, the design cost of ASICs runs into hundreds of millions of US dollars and requires large volumes and/or large profits to justify it. This often restricts ASICs as a solution to large actors.

To democratize access to ASIC-like efficiency for small actors and non-specialists, KTH has proposed a Lego-inspired design framework called SiLago. The impact of this demo project will be well beyond this specific use case from Atlas Copco; it will open the doors for ecologically and economically scalable energy-efficient digitalization in many sectors, including scientific super-computing.

Crossdisciplinary collaboration

This demo project spans multiple disciplines: mechatronics, AI and ML algorithms, sensor informatics, computer architecture, and VLSI design. It is a collaboration between KTH and Atlas Copco.

About the project

Objective

The project aims to develop an integrated digital infrastructure system to enhance the level of automation for smart construction. The initial goal will involve the creation of models for the digital twin of the robotic environment on construction sites. The digital twin will be used for remote real-time monitoring, prediction, optimization and multi-robot task planning and control. The results will be tested and applied to a practical Skanska use case.

Background

Construction sites today still rely to a large extent on manual labour. The vision for the future is to leverage automation equipment (machines, robots) as far as possible in order to speed up the production cycle and enhance quality while also reducing human risks, carbon emissions, and costs. Smart construction, in essence a flexible automation process, requires a stringent digitalization of the construction site in terms of real-time digital twins of products and production systems, paired with advanced algorithms for the control of machines, the coordination of robots, and the assurance of safety in the workspace.

The project strives to realise such systems, leading to fundamental research challenges and practical implementations in relevant use cases. The project developments can lay the foundation for future activities by forming and evolving a consortium nucleus.

Crossdisciplinary collaboration

The project partners are Ericsson AB, Skanska and KTH.