About the project

Objective

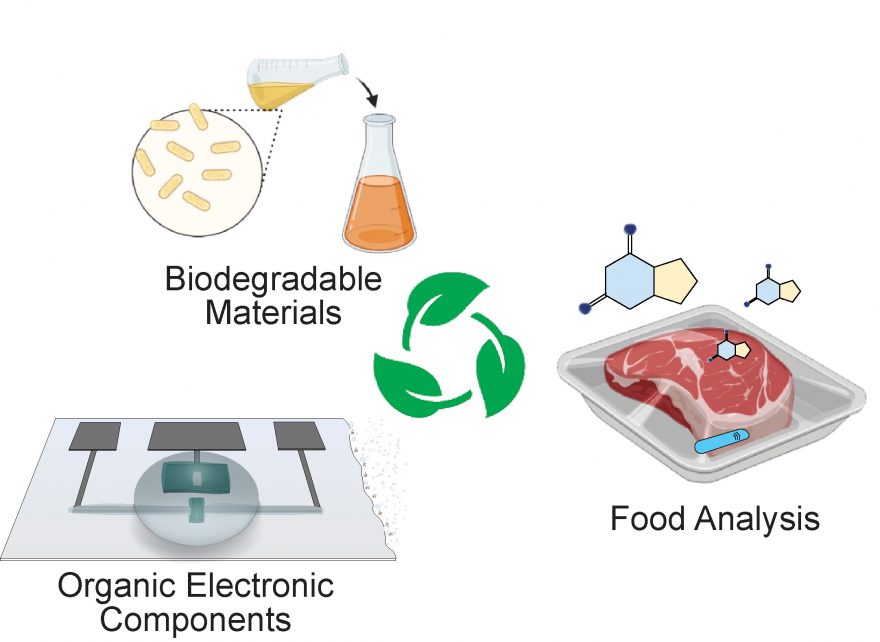

The project aims to develop biodegradable recording platforms for digital technologies that capture biological signals and can be safely integrated into life and the environment. The project develops the first example of a biodegradable technology that degrades after disposal through the action of specific enzymes. To reach this goal, we pursue an interdisciplinary approach combining expertise in materials chemistry and rational polymer design, organic electronics (device fabrication), and biology (biodegradation and toxicity).

Background

As electronics become increasingly integrated into our daily lives, there is a growing demand for technologies that decompose after a period of stable operation without leaving a permanent trace (transience). For consumer electronics such as digital packaging, biodegradable solutions are set to contribute to the Swedish and EU goals of reducing waste and promoting greener technologies by 2030. A key challenge is to develop components that combine stable operation under environmental conditions (humidity, pH, etc.) with safe biodegradation into non-toxic products.

Cross-disciplinary collaboration

The researchers in the team represent the Department of Engineering Mechanics at KTH SCI and the Department of Fibre and Polymer Technology at KTH CBH.

About the project

Objective

The project aims to develop digital twins of the personalized human neuromusculoskeletal system by integrating the latest developments in personalized neuromusculoskeletal modelling, innovative biomass-based printable electronics, and simultaneous 3D ultrasound imaging. Its breakthrough feature, the integration of ultrasound-transparent electrodes (ultrasonic electrodes) into the digital twin framework, opens a new window for real-time monitoring and supervision in personalized rehabilitation.

Background

It is estimated that 15% of the world's population lives with one or more disabling conditions, as do more than 26% of those over 60 years of age. Impaired motor function is one of the major disabilities: affected people may lose their ability to perform daily activities and experience reduced health-related quality of life. The management of complex disability relies largely on rehabilitation. In clinical practice, supervising and evaluating a rehabilitation motion pattern remains a medical and engineering challenge due to the lack of biofeedback information about the effect of the rehabilitation motion on individual human biological tissues and structures.

Cross-disciplinary collaboration

The researchers in the team represent the Department of Engineering Mechanics at KTH SCI and the Department of Fibre and Polymer Technology at KTH CBH.

About the project

Objective

This project aims to develop smart autonomous power converters for optimal support of electric power systems. It will build a new control framework based on digitalization and AI to provide optimal grid support functions and enable further integration of renewable energy sources. This will be achieved by developing a novel combined optimization and control algorithm for coordinating converters and an AI-based scheme for the autonomous control of smart converters.

The outcome of this project is expected to enhance the resilience of electrical power systems with large-scale integration of renewables and add significant value to the digitalized electrical power industry.

Background

To achieve the national target of 100% renewables by 2040, renewables are increasingly being integrated into electric power systems. Intermittent renewables, however, increase the risk of grid instability through voltage fluctuations, frequency deviations, and inertia issues, which limits their further integration. Renewable interface converters offer promising new ways to provide various grid support functions. Using these grid support capabilities efficiently requires optimized coordination of many converters. Such coordination is challenging due to limited communication support, multi-timescale operation, diverse real-time control actions, and computational complexity.

Cross-disciplinary collaboration

The researchers in the team represent the Department of Electrical Engineering at KTH EECS and the Department of Mathematics at KTH SCI.

About the project

Objective

Extended Reality (XR) technologies offer remote co-located experiences, realistic interactions, and the potential to support a climate-neutral society. However, understanding their impact on cultural and social behaviours, and addressing ethics, privacy, and security concerns, is crucial for both the public and industry. One of the major limitations of current XR technology is its focus on single-user experiences, particularly when other users are physically co-present. Previous criticism has also highlighted that VR experiences were primarily designed for the white, Western, male sensorial body, leading to biased and non-inclusive design strategies.

This default design has caused harm to female bodies, enabled abusive behaviour, and significantly neglected gender differences in XR experiences. Moreover, XR technologies raise several ethical issues, such as privacy and security. Hence, XR privacy and the ethical implications of (unintentional) participation are treated as matters of public concern within this project.

The project objectives are:

- To understand the relationship between the use of extended reality technologies and the requirements of society in terms of ethics, security, trustworthiness and inclusivity from a society-centric perspective.

- To explore the multisensory experiences of active and passive users, using haptic feedback, across a set of indoor and outdoor extended reality contexts.

- To derive a set of ethical guidelines based on controlled empirical evaluations with the aforementioned set of experiences and users’ reflections on them.

Cross-disciplinary collaboration

The researchers in the team represent the KTH School of Electrical Engineering and Computer Science and RISE Research Institutes of Sweden, Digital Systems Division.

About the project

Objective

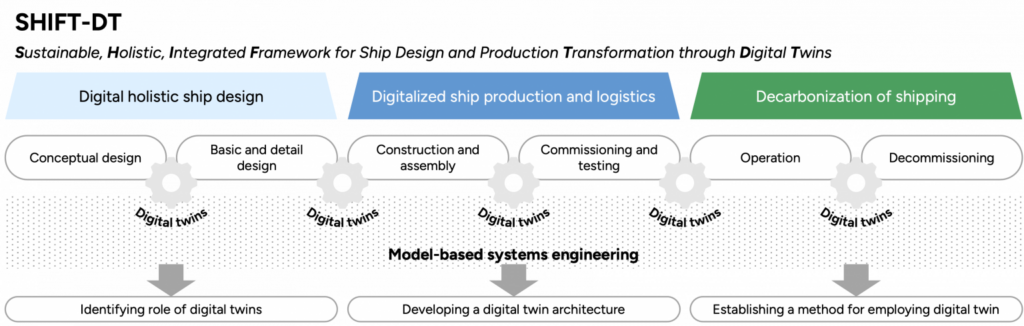

SHIFT-DT aims to go beyond current advances in ship design and production technologies by establishing a framework that supports the decarbonization of shipping. The framework combines holistic ship design with digitalized ship production and logistics through digital twins based on the model-based systems engineering method. This novel approach positions digital twin technology as the next-generation solution for sustainable ship design and production. By realizing these research objectives, we can contribute significantly to integrating digital and innovative solutions into sustainable ship design and production.

Background

Digitalization and decarbonization are two transformative forces that will shape the future of shipping. Through technologies such as Digital Twins (DTs), digitalization has the potential to revolutionize ship design and production, thereby advancing decarbonization. Extensive literature reviews, however, suggest that the application of DTs in ship design and production is still nascent. Studies of ships designed entirely with DTs are notably lacking, leaving the maritime industry with the significant challenge of establishing a new design methodology for ships based on DT technology. The challenge extends to the broader manufacturing industry, where new design processes are evolving slowly and implementing DTs in ship production remains an emerging, complex concept.

Cross-disciplinary collaboration

A collaboration has been established between the KTH Center for Naval Architecture at the Department of Engineering Mechanics and the Production Logistics Research Group at the Department of Production Engineering. This multidisciplinary team, comprising recognized experts in ship design and production, is exceptionally positioned to lead the digitalization of sustainable ship design and production.

About the project

Objective

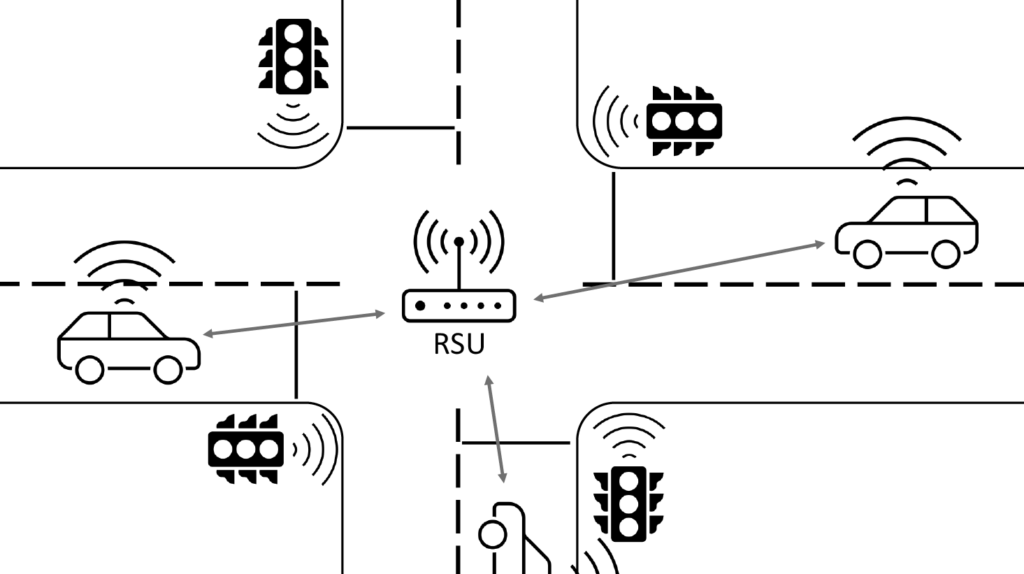

GPARSE aims to mitigate the harm of collisions at road intersections, both proactively and reactively, using edge infrastructure. The proactive approach orchestrates traffic arrival at the intersection using a novel concept of safety zones to avoid collisions. If a collision is nevertheless likely, the reactive approach enables the roadside unit to provide resources to the affected vehicles. These include contingency path planning that takes the safety zones into account, and aggregated sensor data from the intersection that gives a complete view the vehicles’ sensors cannot obtain alone. To achieve this, GPARSE will focus on nominal and contingency path planning that considers the novel concept of safety zones and the information available from the roadside unit. This planning must guarantee timely interaction between the different actors at the intersection. GPARSE will therefore develop techniques that allow the roadside unit to provision computation resources for the different types of workloads arising from sensors, proactive tasks, and reactive tasks, so that the timing constraints of each workload are met. This is complicated by the fact that these constraints often span several chains of computation tasks across different platforms.

Background

Low-speed collisions at intersections are common but can still lead to permanent impairment, in particular for women. Rudimentary driver assistance systems can reduce the severity of injuries by braking, but more advanced mitigation of unavoidable collisions faces several challenges: no single vehicle has the necessary overview; other traffic can interfere with the situational awareness of driver assistance systems and can be involved in secondary collisions; and the timeframes involved are short. Contingency path planning, i.e., reconfiguring an unavoidable collision to decrease the resulting harm, must be able to estimate the collision parameters of the involved actors at runtime. This is challenging in the general case and has a large effect on the outcome of the approach. At the same time, computation platforms on vehicles, as well as roadside units, must schedule heterogeneous workloads so that diverse timing constraints are met. Online orchestration of dynamic and static workloads under temporal constraints across all compute nodes is therefore necessary.

Cross-disciplinary collaboration

The researchers in the team represent the School of Electrical Engineering and Computer Science (EECS), KTH and the School of Industrial Engineering and Management (ITM), KTH.

About the project

Objective

The project aims to tackle several methodological and technical challenges encountered during the first phase of developing digital twins (DT) of the personalized human neuromusculoskeletal system: durable biomass sensor design, real-time modelling, and multi-sensor fusion. Overcoming these bottlenecks will greatly improve the reliability and robustness of the DT framework. The framework can then serve as a clinically friendly biofeedback neurorehabilitation platform built on a highly modular and robust wearable sensor-fusion framework, including the innovative biomass-based, ultrasound-transparent electromyography electrodes.

Background

It is estimated that 15% of the world’s population lives with one or more disabling conditions. Impaired motor function is one of the major disabilities. The management of complex disability currently relies largely on rehabilitation. In clinical practice, supervising and evaluating a rehabilitation motion pattern remains a medical and engineering challenge due to the lack of biofeedback information about the effect of the rehabilitation motion on individual human biological tissues and structures. The digital twin (DT) is one of the most important concepts in digitalization: it integrates the data, models, and other information that allow us to monitor the current state of a real system, in this context the human musculoskeletal system. Reliable fusion of data from wearable sensors is critical to completing the DT workflow. The recent development of epidermal electronics offers a promising approach to such wearable sensing. In particular, natural wood-derived nanocellulose shows promise in epidermal electronics for the simultaneous collection of dual signals, owing to its biocompatibility, excellent mechanical properties, high water retention, and great potential for multi-functionalization.

Cross-disciplinary collaboration

This project brings together expertise in biomechanical modelling, medical imaging, artificial intelligence, wood nanoscience and nanoengineering, and biomaterials design, involving researchers from the Department of Engineering Mechanics at KTH SCI and the Department of Fibre and Polymer Technology at KTH CBH.