About the project

Objective

This project aims to engineer the rules by which intelligent robots interact with each other in public spaces. In particular, this project focuses on tools from the field of mechanism design, which strives to set rules for interaction between rational agents. Designing rules via mechanism design allows for systems where robots collaborate toward global goals, even when their individual goals and specifications differ. Mechanism design, coupled with tools from formal methods and planning, can help achieve global goals like safely sharing resources, minimizing the risk of failures in uncertain systems, and engendering the trust of humans.
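
As a small illustration of what a rule "set by mechanism design" can look like, the sketch below uses a sealed-bid second-price (Vickrey) auction to hand out a single shared resource, such as access to a narrow corridor. This is a textbook mechanism chosen for illustration, not necessarily one the project uses; the robot names, bid values, and the `second_price_auction` and `utility` helpers are hypothetical.

```python
from typing import Dict, Tuple


def second_price_auction(bids: Dict[str, float]) -> Tuple[str, float]:
    """Allocate one shared resource among bidding robots.

    The highest bidder wins and pays the second-highest bid.
    Ties are broken by robot name so the result is deterministic.
    """
    if len(bids) < 2:
        raise ValueError("need at least two bidders")
    # Sort by bid (descending), then by name (ascending) to break ties.
    ranked = sorted(bids.items(), key=lambda kv: (-kv[1], kv[0]))
    winner = ranked[0][0]
    payment = ranked[1][1]
    return winner, payment


def utility(true_value: float, robot: str, bids: Dict[str, float]) -> float:
    """Utility of `robot`: true value minus payment if it wins, else zero."""
    winner, payment = second_price_auction(bids)
    return true_value - payment if winner == robot else 0.0


if __name__ == "__main__":
    # Hypothetical scenario: robot_a truly values the resource at 9.0.
    others = {"robot_b": 4.0, "robot_c": 7.0}
    for reported in (5.0, 9.0, 12.0):
        bids = {**others, "robot_a": reported}
        print(reported, utility(9.0, "robot_a", bids))
```

In this scenario, under-reporting (5.0) loses the resource and yields utility 0.0, while reporting the true value (9.0) or over-reporting (12.0) both yield 2.0. Misreporting never helps robot_a here, which is the incentive property that makes rules of this kind attractive when individual goals differ.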

Background

Multi-robot planning is a complex optimization problem that must consider both the goals of each robot and the interaction between those goals. The first step toward solving this problem requires understanding the realities of modern-day robots. The second step requires meta-reasoning through game theory. This problem becomes more complicated with the introduction of humans, who interact with robots in unique and often unpredictable ways.

About the Digital Futures Postdoc Fellow

Anna Gautier is a postdoctoral researcher in artificial intelligence and robotics. She works in the Robotics, Perception and Learning division at KTH Royal Institute of Technology. Anna obtained her PhD from the University of Oxford in July 2023, with a thesis entitled “Resource Allocation for Constrained Multi-Agent Systems.” Her research interests include multi-agent systems, human-robot interaction, planning under uncertainty, formal methods and mechanism design.

Main supervisor

Jana Tumova, Associate Professor at the Department of Robotics, Perception, and Learning at KTH Royal Institute of Technology, Digital Futures Faculty

Co-supervisor

Iolanda Leite, Associate Professor at the Department of Robotics, Perception, and Learning at KTH Royal Institute of Technology, Digital Futures Faculty

Watch the recorded presentation at the Digitalize in Stockholm 2023 event

About the project

Objective

The overall objective is to develop and evaluate AI-based and classical optimization (mathematical programming) approaches for sensor placement and control, data processing, communication, and motion planning.

This project aims to develop novel algorithms and computational methods for optimal planning, deployment, and operations of a network of AUVs and sensors in undersea environments. These algorithms will focus on optimizing the placement of sensors and movement of AUVs across the region, paying attention to relevant objectives including coverage and communication robustness. They will also focus on processing locally collected AUV measurements (e.g., sonar data) for situational awareness tasks (e.g., the emergence of an adversarial threat). The project will consider classical model-based approaches, AI/ML approaches, and hybrid approaches to developing these algorithms, comparing and contrasting them under different environmental settings and dynamics.
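
To make the coverage objective concrete, the following is a minimal sketch of a classical greedy heuristic for sensor placement, assuming each candidate site covers a known set of grid cells. It is an illustrative baseline rather than the project's method; the site names, the cell sets, and the `greedy_sensor_placement` function are hypothetical.

```python
from typing import Dict, List, Set


def greedy_sensor_placement(coverage: Dict[str, Set[int]], budget: int) -> List[str]:
    """Pick up to `budget` sites, each time taking the site that covers
    the most cells not yet covered by earlier picks.

    Ties go to the site that appears first in `coverage`.
    """
    chosen: List[str] = []
    covered: Set[int] = set()
    for _ in range(budget):
        best_site, best_gain = None, 0
        for site, cells in coverage.items():
            if site in chosen:
                continue
            gain = len(cells - covered)  # newly covered cells
            if gain > best_gain:
                best_site, best_gain = site, gain
        if best_site is None:
            break  # no remaining site adds coverage
        chosen.append(best_site)
        covered |= coverage[best_site]
    return chosen


if __name__ == "__main__":
    # Hypothetical candidate sites and the cells each would cover.
    sites = {
        "s1": {1, 2, 3},
        "s2": {3, 4},
        "s3": {4, 5, 6, 7},
        "s4": {1, 7},
    }
    print(greedy_sensor_placement(sites, budget=2))
```

For maximum coverage problems of this form, the greedy rule is known to reach at least a (1 − 1/e) fraction of the optimal coverage, which makes it a common reference point when comparing model-based and learning-based approaches. Additional objectives named above, such as communication robustness, are not modelled in this sketch.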

Of particular interest in this project is coordinating sensors and fleets of autonomous underwater vehicles (AUVs) to patrol regions of the ocean. However, these settings pose unique challenges in placement/motion planning such as limited communication, computations, and data processing. The communication challenges are mainly dealt with in a second sub-project led by the researchers at Purdue University.

Background

Surveillance systems are becoming increasingly reliant on the ability of autonomous networked agents to conduct intelligence, surveillance, and reconnaissance (ISR) tasks. Much recent effort has been devoted to AI/ML-based approaches for augmenting such systems, though pinpointing exactly when AI/ML gives clear-cut advantages over traditional optimization and analytics-based approaches is still an open question. Moreover, while many existing efforts in autonomy are focused on systems of drones, sensors, and other vehicles that operate above the surface, little attention has been paid to undersea ISR settings. The project focuses on the undersea setting, bringing together expertise from different fields, and forms a new line of collaboration between KTH, Purdue University, and Saab.

Crossdisciplinary collaboration

This project is part of a larger collaboration between Saab, KTH, and Purdue University. The project focuses on developing novel algorithms and computational methods for planning, deploying, controlling, and operating a network of AUVs and different types of sensors over contested undersea environments.

The project brings together expertise in applied mathematics, optimization, electrical engineering, and underwater environments.

Participating in the project:

- PhD student at KTH (recruitment ongoing)

- Jan Kronqvist, KTH, PI

- Roger Berg, Saab, PI

- Per Enqvist, KTH, co-PI

Collaborators in the larger project:

- Christopher Brinton, PI, Purdue University

- Shreyas Sundaram, co-PI, Purdue University

About the project

Objective

The ALARS project aims to drastically improve how Unmanned Underwater Vehicles (UUVs) are deployed and recovered in maritime operations. Currently, UUV launch and retrieval rely on manual, time-consuming, and high-risk methods involving surface vessels and human intervention. ALARS introduces an autonomous aerial solution that integrates drone (UAV) technology with UUV operations, significantly enhancing efficiency, safety, and scalability in underwater missions.

Key features of ALARS:

- UAV-Based UUV Deployment – Autonomous drones will carry and release UUVs precisely at mission locations.

- Automated Recovery System – UAVs equipped with an advanced winch mechanism will retrieve UUVs from the water without requiring human intervention.

- AI-Powered Navigation & Stability Control – The system leverages machine learning algorithms for object detection and dynamic stability during deployment and retrieval.

- Multi-UUV Operations – ALARS supports the simultaneous launch and recovery of multiple UUVs, significantly boosting operational capacity.

By automating these processes, ALARS aims to reduce human risk, increase mission success rates, and unlock new capabilities in maritime security, environmental monitoring, and offshore industries.

Background

Modern UUV operations are essential for naval intelligence, surveillance, and reconnaissance (ISR), environmental monitoring, and subsea exploration. However, traditional deployment and recovery methods rely on mothership-based handling, which presents multiple challenges:

- Operational inefficiency – Manual operations take time and limit real-time responses.

- Safety concerns – Harsh weather and ocean conditions pose risks to human operators.

- Limited scalability – Only one UUV can be deployed or retrieved at a time.

ALARS directly addresses these limitations by integrating aerial robotics with autonomous underwater systems, allowing for:

- Faster and more flexible UUV deployment and recovery.

- Reduced risk to human operators by eliminating manual handling.

- Multi-domain autonomy with real-time AI-powered decision-making.

By leveraging Sweden’s expertise in robotics, AI, and autonomous systems, ALARS sets a new global benchmark for efficient and safe maritime operations.

Crossdisciplinary collaboration

The ALARS project brings together experts in:

- Autonomous Underwater Systems – UUV development and deployment strategies (KTH, SMaRC)

- Artificial Intelligence & Machine Learning – AI-driven target detection and real-time decision-making (KTH)

- Maritime Defense & ISR Operations – Application-specific design for naval and offshore use cases (Saab Kockums)

The project is a collaboration between KTH, Saab Kockums, and SMaRC, with direct industry involvement to ensure real-world validation and future deployment.

Principal Investigators (PIs)

- Ivan Stenius (KTH, Project Lead)

- John Folkesson (KTH, AI & Machine Learning Integration)

- Petter Ögren (KTH, Multi-Agent Coordination and Control Systems)

About the project

Objective

The Digital Futures Drone Gymnasium explores the potential of physical and embodied training accessories to support drone programming and their interactions with humans. The project sits at the intersection of mobile robotics, autonomous systems, machine learning, and human-computer interaction, providing tools to study and envision novel relationships between humans and robots.

Training accessories are tools that allow us to better understand how drones can be effectively operated in work and living spaces. Our training accessories physicalise the control mappings of our machines, which results in the distribution of the cognitive load of controlling a drone over the whole body.

Background

The project follows in the footsteps of the earlier DF Demonstrator Project Drone Arena and largely involves the same research team. The PIs are at the forefront of their respective research fields and provide a unique and complementary combination of expertise. The multiple awards and recognitions obtained by Prof. Luca Mottola in the field of aerial drones provide a stepping stone for technical work. Prof. Kristina Höök pioneered a design philosophy named Soma Design of relevance to designing interactions with autonomous or semi-autonomous systems, such as drones.

The drone manufacturer Bitcraze, based in Malmö, supports the project and provides a much-needed industry perspective. Dr. Joseph La Delfa, who was previously part of this research team and is now an industrial postdoctoral researcher at Bitcraze, will act as a liaison between the Drone Gymnasium and the company. The project is also supported by Rachael Garrett, a PhD candidate at KTH whose research explores ethics in the design of autonomous systems. She also acts as an international collaborator with the Turing AI World-Leading Fellowship Somabotics: Creatively Embodying AI.

Crossdisciplinary collaboration

Prof. Mottola is an expert in mobile robotics and autonomous systems. He focuses on the concrete realisation of the training accessories across hardware and software. Prof. Kristina Höök is a professor in interaction design, specialising in designing for movement-based interactions between users and autonomous or semi-autonomous systems.

The expertise of the two PIs comes together in the organisation of the workshops and interactive exhibitions. Accomplishing the project goals, especially deriving insights from the workshops that might transfer to other application domains, will require blending Höök’s skillset with Mottola’s systems expertise.

The project collaborates with Bitcraze. Bitcraze AB is a Swedish company creating open-source hardware and software for drone and robotics development. Founded in 2011 by the team behind the Crazyflie nano quadcopter, Bitcraze builds tools that empower people to explore, innovate, and learn through technology. Learn more at bitcraze.io

About the project

Objective

Mediverse will showcase how clinical and medical researchers can integrate multimodal health data into a unified knowledge graph. Our showcase will give a live example of advanced data exploration, complex medical searches, and predictive analytics across diverse modalities, such as genomic data, clinical studies, and medical imaging. Leveraging Graph Neural Networks (GNNs) and black-box uncertainty estimation, Mediverse also aims to establish trust in AI-driven healthcare insights, accelerating medical discovery while ensuring transparency and reliability, two instrumental needs in the health sector.

Background

Health data is typically fragmented and siloed across various institutions and data formats, making integration and large-scale analysis highly challenging. Despite regulatory efforts, the problem tends to worsen over the years. At the same time, existing approaches to interoperability struggle to capture the full spectrum of clinical relationships across different medical domains. Mediverse addresses these challenges by embracing data diversity. At its core, our method is based on a unified future-proof hierarchical model built using knowledge graph representations that link diverse healthcare datasets, enabling seamless interaction and in-depth exploration. By incorporating state-of-the-art graph representation learning techniques, Mediverse facilitates cross-ontology mapping, providing a powerful tool for clinicians and researchers to uncover previously inaccessible insights.

Crossdisciplinary collaboration

Mediverse is a collaboration between KTH’s Data Systems Lab (EECS) and the Center for Data-Driven Health (CBH). It brings together valuable expertise in health informatics, knowledge graph modeling, machine learning, and data systems engineering. The project is further strengthened by partnerships with Karolinska Institute, Region Stockholm, and the private sector, enabling real-world validation and deployment within Sweden’s healthcare ecosystem.

About the project

Objective

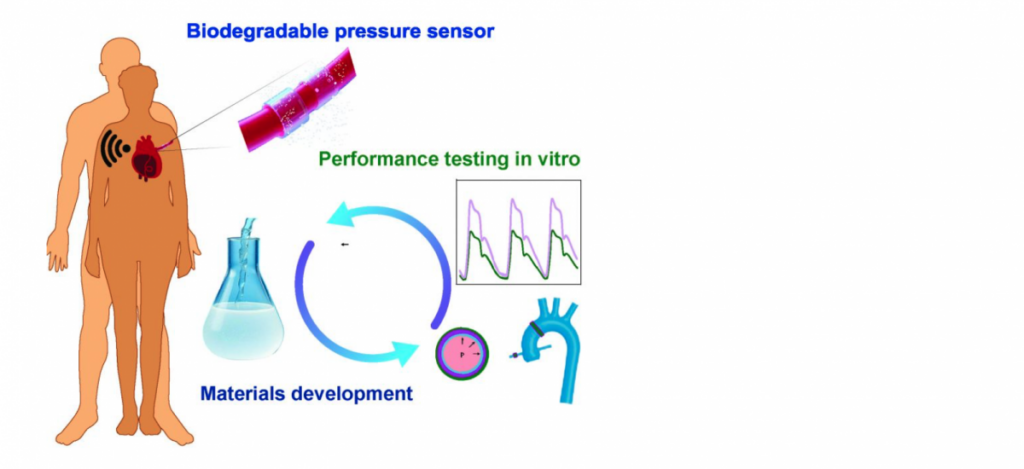

The project aims to develop a self-powered biodegradable pressure sensor with the potential for wireless data transmission that is tested in vitro under conditions that mimic the in vivo environment of physiological blood flow. The pressure sensor is based on the self-powered triboelectric nanogenerator technology and will combine components that enable high performance and on-demand biodegradation. Sensor validation will be enabled by a hybrid mock circulatory loop: an in vitro system that simulates the dynamics of the circulatory system of healthy and pathological patients. This setup will make it possible to validate sensor-generated pressure signals against reference pressures generated by a digital patient representation.
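
One simple way to quantify agreement between a sensor trace and a reference trace is the root-mean-square error (RMSE). The sketch below assumes both signals are sampled at the same time points and expressed in the same unit; the `rmse` helper and the sample values are hypothetical and are not taken from the project.

```python
import math
from typing import Sequence


def rmse(measured: Sequence[float], reference: Sequence[float]) -> float:
    """Root-mean-square error between two equally sampled pressure traces."""
    if len(measured) != len(reference) or len(measured) == 0:
        raise ValueError("traces must be non-empty and of equal length")
    squared = [(m - r) ** 2 for m, r in zip(measured, reference)]
    return math.sqrt(sum(squared) / len(squared))


if __name__ == "__main__":
    # Hypothetical pressure samples (mmHg): sensor vs. reference.
    sensor = [118.0, 96.0, 81.0, 103.0]
    reference = [120.0, 95.0, 80.0, 105.0]
    print(round(rmse(sensor, reference), 3))
```

A lower value indicates closer agreement. In practice the two traces would first need to be time-aligned and resampled, which this sketch does not attempt.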

- Erica Zeglio, Assist. Prof. in organic bioelectronics / materials chemistry at the Department of Chemistry at SU, is an expert in materials with ionic and electronic conductivity and their application in bioelectronic devices.

- Seraina Dual, Assist. Prof. in Intelligent Health Technologies at the Department of Biomedical Engineering and Health Systems at KTH, is an expert in implantable sensors and robotic systems for prevention and treatment of cardiovascular disease.

About the project

Objective

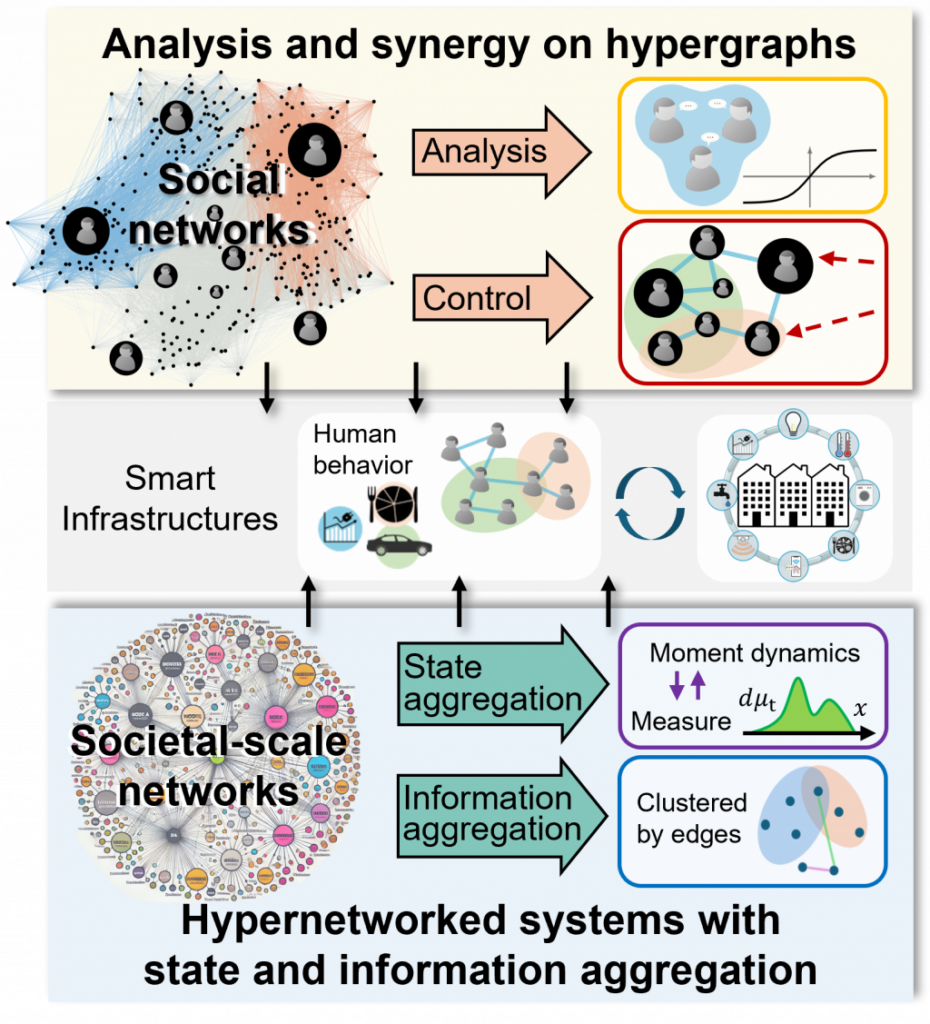

The project aims to develop analysis and synergy mechanisms for complex systems consisting of high-order interactions, with a particular focus on opinion and societal-scale dynamics on hypernetworks. The ultimate goal is to apply the methodology and insight derived from collective behaviors of social dynamics on hypernetworks and moment-based approaches in societal-scale networked systems to design more efficient and sustainable infrastructures. These include transportation systems, smart grids, and smart buildings, where human decisions and group interactions are pivotal.

Background

In recent years, there has been a growing interest in studying the collective behavior of systems with higher-order interactions. The motivation stems from a practical need in real-world applications where the phenomena observed in complex systems cannot be adequately captured by considering only pairwise interactions between agents. Instead, these systems require the inclusion of higher-order interactions, often represented by hypergraphs or simplicial complexes. Such a need is evident in numerous applications, ranging from neuron dynamics to protein interaction networks, and from ecological systems to social systems. Understanding how these higher-order interactions affect collective behaviors in social dynamics and incorporating their effects into human-involved infrastructures is greatly needed.
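
The difference between pairwise and group interactions can be seen in a toy opinion model. In the sketch below, an agent is pulled toward the rest of a group only when the other members already agree with each other, an effect that depends on the group as a whole rather than on any single pair. The update rule, the `tolerance` and `step` parameters, and the `hypergraph_step` function are illustrative assumptions, not the project's model.

```python
from typing import List, Sequence


def hypergraph_step(
    opinions: Sequence[float],
    hyperedges: Sequence[Sequence[int]],
    step: float = 0.5,
    tolerance: float = 0.2,
) -> List[float]:
    """One synchronous opinion update on a hypergraph.

    For each hyperedge containing agent i, the other members influence i
    only if their own opinions lie within `tolerance` of each other.
    Agent i then moves a fraction `step` toward the average pull.
    """
    updated = list(opinions)
    for i, x in enumerate(opinions):
        pulls = []
        for edge in hyperedges:
            if i not in edge:
                continue
            others = [opinions[j] for j in edge if j != i]
            if not others:
                continue
            # Group influence applies only when the rest of the group agrees.
            if max(others) - min(others) <= tolerance:
                pulls.append(sum(others) / len(others) - x)
        if pulls:
            updated[i] = x + step * sum(pulls) / len(pulls)
    return updated


if __name__ == "__main__":
    # Three agents in one group of size three: agents 1 and 2 agree.
    print(hypergraph_step([0.0, 1.0, 1.0], [(0, 1, 2)]))
```

With these inputs, agent 0 moves from 0.0 to 0.5 because agents 1 and 2 agree with each other, while agents 1 and 2 stay at 1.0 because the remaining members of their group (0.0 and 1.0) disagree.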

Crossdisciplinary collaboration

Our research team is formed by PIs from two KTH Schools, Angela Fontan (KTH/EECS) and Silun Zhang (KTH/SCI). The project team will also include a postdoctoral researcher with a strong background and interest in networked systems, control and systems theory, optimization, and large-scale system modeling.